Getting Started

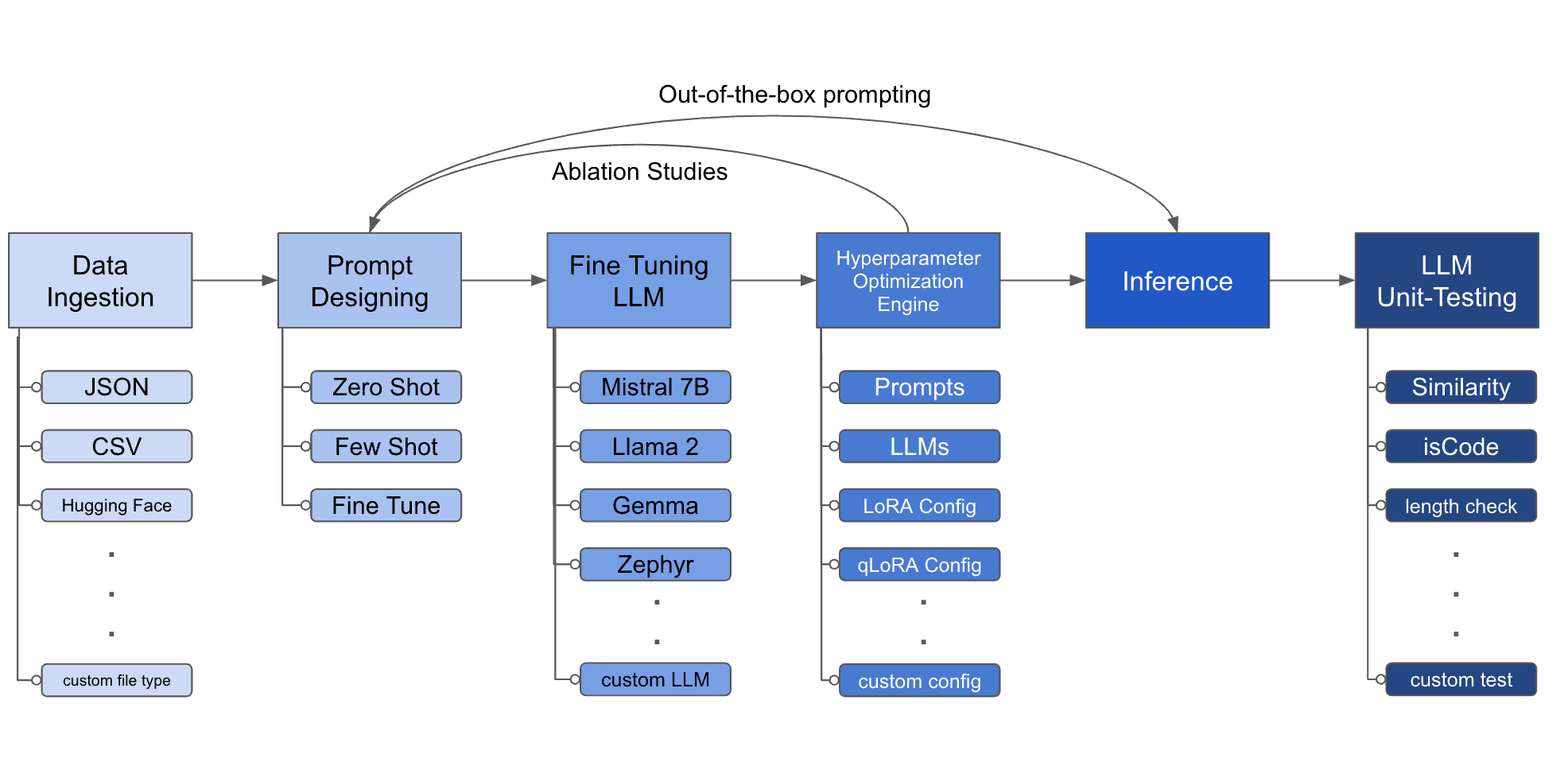

The LLM Fine-tuning Toolkit is a config-based CLI tool for launching a series of fine-tuning experiments and gathering their results. From a single YAML config file, users can define the following:

Data

- Bring your own dataset in any of the json, csv, and huggingface formats.

- Define the prompt format and inject desired columns into the prompt.

Fine Tuning

- Configure desired hyperparameters for quantization and LoRA fine-tune.

Ablation

- Define multiple hyperparameter settings to iterate through.

Inference

- Configure desired sampling algorithm and parameters.

Testing

- Test desired properties such as length and similarity against reference text.

Content

This documentation page is organized in the following sections:

- Quick Start provides a quick overview of the toolkit and helps you get started running your own experiments.

- Configuration walks through all the changes that can be made to customize the experiments.

- Developer Guides goes over how to extend each component for custom use-cases and for contributing to this toolkit.

- API Reference details the underlying modules of this toolkit.

Installation

Clone Repository

git clone https://github.com/georgian-io/LLM-Finetuning-Hub.git
cd LLM-Finetuning-Hub/

Install CLI

# build image
docker build -t llm-toolkit .

# launch container
docker run -it llm-toolkit # with CPU
docker run -it --gpus all llm-toolkit # with GPU

Running the Toolkit

This guide is intended to walk through the initial setup, explain the key components of the configuration, and offer advice on customizing the fine-tuning job.

First, make sure you have read the installation guide above and installed all the dependencies. Then, to launch a LoRA fine-tuning job, run the following command in your terminal:

python3 toolkit.py

This command initiates the fine-tuning process using the settings specified in the default YAML configuration file config.yaml.

save_dir: "./experiment/"

ablation:
  use_ablate: false

# Data Ingestion -------------------
data:
  file_type: "huggingface" # one of 'json', 'csv', 'huggingface'
  path: "yahma/alpaca-cleaned"
  prompt: >- # prompt, make sure column inputs are enclosed in {} brackets and that they match your data
    Below is an instruction that describes a task.
    Write a response that appropriately completes the request.
    ### Instruction: {instruction}
    ### Input: {input}
    ### Output:
  prompt_stub: >- # Stub to add for training at the end of prompt, for test set or inference, this is omitted; make sure only one variable is present
    {output}
  test_size: 0.1 # Proportion of test as % of total; if integer then # of samples
  train_size: 0.9 # Proportion of train as % of total; if integer then # of samples
  train_test_split_seed: 42

# Model Definition -------------------
model:
  hf_model_ckpt: "NousResearch/Llama-2-7b-hf"
  quantize: true
  bitsandbytes:
    load_in_4bit: true
    bnb_4bit_compute_dtype: "bf16"
    bnb_4bit_quant_type: "nf4"

# LoRA Params -------------------
lora:
  task_type: "CAUSAL_LM"
  r: 32
  lora_alpha: 16
  lora_dropout: 0.1
  target_modules:
    - q_proj
    - v_proj
    - k_proj
    - o_proj
    - up_proj
    - down_proj
    - gate_proj

# Training -------------------
training:
  training_args:
    num_train_epochs: 5
    per_device_train_batch_size: 4
    optim: "paged_adamw_32bit"
    learning_rate: 2.0e-4
    bf16: true # Set to true for mixed precision training on Newer GPUs
    tf32: true
  sft_args:
    max_seq_length: 1024

inference:
  max_new_tokens: 1024
  do_sample: True
  top_p: 0.9
  temperature: 0.8

Fine-tuning

Launch Fine-tuning Job

This guide will walk you through the initial setup, explain the key components of the configuration, and offer advice on customizing your fine-tuning job.

First, read the installation guide and install all the dependencies. Then, to launch a LoRA fine-tuning job, run the following command in your terminal:

python3 toolkit.py

Default Config

This command initiates the fine-tuning process using the settings specified in the default YAML configuration file config.yaml.

Artefact Outputs

This config runs fine-tuning and saves the artefacts under the directory ./experiment/[unique_hash]. Each unique configuration generates a unique hash, so the tool can automatically pick up where it left off. For example, if you need to stop training before it finishes, you can relaunch the script and the program will load the dataset already generated in that directory, letting you resume instead of starting over from the beginning.

After the script finishes running you will see these distinct artefacts:

/config/config.yml: copy of the config file used for this experiment

/dataset/dataset.pkl: generated pkl file in huggingface Dataset format

/model/*: model weights saved using huggingface format

/results/results.csv: csv of prompt, ground truth, and predicted values

/qa/qa.csv: csv of quality assurance unit tests (e.g. vector similarity between gold and predicted output)
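Resuming an interrupted run depends on the same configuration always mapping to the same directory name. The sketch below illustrates that idea only; the hashing scheme (SHA-256 over key-sorted JSON, truncated to 8 characters) and the helper name `experiment_dir` are assumptions for illustration, not the toolkit's actual implementation.

```python
import hashlib
import json
from pathlib import Path


def experiment_dir(config: dict, save_dir: str = "./experiment/") -> Path:
    """Map a config to a stable directory name (hypothetical helper)."""
    # Sorting keys makes the serialisation independent of dict insertion order.
    canonical = json.dumps(config, sort_keys=True)
    digest = hashlib.sha256(canonical.encode("utf-8")).hexdigest()[:8]
    return Path(save_dir) / digest


base = {"lora": {"r": 32, "lora_alpha": 16}, "model": {"quantize": True}}
same = {"model": {"quantize": True}, "lora": {"lora_alpha": 16, "r": 32}}
changed = {"lora": {"r": 64, "lora_alpha": 16}, "model": {"quantize": True}}

# Identical settings land in the same directory; a changed rank does not.
print(experiment_dir(base) == experiment_dir(same))
print(experiment_dir(base) == experiment_dir(changed))
```

Because the name is derived only from the settings, relaunching with an unchanged config finds the earlier directory, while any edited parameter starts a fresh one.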

Custom Fine-tuning

You can modify config.yaml to launch custom training jobs. For a more detailed and nuanced treatment of what you can input into the config file, please reference the "Configuration" section of the documentation.

Loading Custom Datasets

Change the file_type and path under data in config.yml to point to your custom dataset. Ensure your dataset is properly formatted and adjust the prompt accordingly.

...

data:
  file_type: "csv"
  path: "path/to/your/dataset.csv"
  prompt: "Your custom prompt template with {column_name} placeholders"

...

Changing LoRA Rank

Adjust the r and lora_alpha parameters in the LoRA section to experiment with different adaptation strengths.

...
lora:
  r: 64
  lora_alpha: 32
...
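To make the effect of r and lora_alpha concrete, here is a small worked calculation. It assumes the standard LoRA formulation, in which the low-rank update is scaled by lora_alpha / r and each adapted d_out x d_in weight gains r * (d_in + d_out) trainable parameters. The helper names and the 4096 x 4096 layer size are illustrative assumptions, not values read from the toolkit or from any model.

```python
def lora_scaling(r: int, lora_alpha: int) -> float:
    """Scaling applied to the low-rank update in standard LoRA: alpha / r."""
    return lora_alpha / r


def lora_param_count(d_in: int, d_out: int, r: int) -> int:
    """Trainable parameters added to one weight: A is r x d_in, B is d_out x r."""
    return r * (d_in + d_out)


# Illustrative 4096 x 4096 projection (an assumed size).
for r, alpha in [(32, 16), (64, 32)]:
    print(r, alpha, lora_scaling(r, alpha), lora_param_count(4096, 4096, r))
```

Under these assumptions, moving from r: 32, lora_alpha: 16 to r: 64, lora_alpha: 32 keeps the scaling at 0.5 while doubling the adapter's parameter count per layer.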

Changing Base Model

Modify hf_model_ckpt to fine-tune a different base model. Ensure it is compatible with your task and make sure to specify the right modules to tune (different models may have different module names).

...

model:
  hf_model_ckpt: "EleutherAI/gpt-neo-1.3B"

lora:
  target_modules:
    - c_attn
    - c_proj
    - c_fc
    - c_mlp.0
    - c_mlp.2

...

In the new config snippet for changing the model, we've updated the hf_model_ckpt to use the "EleutherAI/gpt-neo-1.3B" model instead of "NousResearch/Llama-2-7b-hf". We've also adjusted the target_modules to match the module names specific to the GPT-Neo architecture.

WARNING

Remember to carefully review the documentation and requirements of the new model you choose to ensure compatibility with your task and the toolkit.

Quality Assurance

Once the model is trained, verify its readiness for production. Quality Assurance testing specifically tailored for Language Model applications may be useful in verifying the model. This approach is distinct from conventional testing methods, as there’s currently no direct means of ensuring that a fine-tuned model meets enterprise standards. Moreover, developers have the flexibility to integrate their own tests into the process.

Available Tests

Generation Property

Generation Length

- Function: LengthTest

- Description: Determines the length of the summarized output and the input sentence. The output length is expected to exceed the input length, aligning with the specific use case.

POS Composition

- Description: Analyzes the grammar of the generated output, focusing on:

  - Verb Percentage: Indicates the proportion of verbs present.

  - Adjective Percentage: Indicates the proportion of adjectives present.

  - Noun Percentage: Indicates the proportion of nouns present.

Word Similarity

Word Overlap

- Function: WordOverLapTest

- Description: Measures how many words the generated output shares with the reference text.

ROUGE Score

- Function: RougeScore

- Description: Computes the Rouge score for the output, providing insight into the quality of summarization.

Embedding Similarity

Jaccard Similarity

- Function: JaccardSimilarity

- Description: Calculates similarity by encoding inputs and outputs.

Dot Product (Cosine) Similarity

- Function: DotProductSimilarity

- Description: Computes the dot product between the encoded inputs and outputs.
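The similarity tests compare two texts either as sets of words or as vectors. The sketch below shows the underlying measures in plain Python, with short lists standing in for sentence embeddings. The function names are illustrative, and the toolkit's own encoder and tokenisation are not shown here, so treat this as an assumption-laden outline rather than the implemented tests.

```python
import math


def jaccard_similarity(a: str, b: str) -> float:
    """Size of the shared word set divided by the size of the combined word set."""
    set_a, set_b = set(a.lower().split()), set(b.lower().split())
    if not set_a and not set_b:
        return 1.0
    return len(set_a & set_b) / len(set_a | set_b)


def dot_product(u: list[float], v: list[float]) -> float:
    return sum(x * y for x, y in zip(u, v))


def cosine_similarity(u: list[float], v: list[float]) -> float:
    """Dot product of the two vectors after scaling each to unit length."""
    norm_u = math.sqrt(dot_product(u, u))
    norm_v = math.sqrt(dot_product(v, v))
    return dot_product(u, v) / (norm_u * norm_v)


# Two shared words ("the", "cat") out of four distinct words.
print(jaccard_similarity("the cat sat", "the cat slept"))
# The raw dot product grows with vector length; cosine similarity does not.
print(dot_product([3.0, 4.0], [6.0, 8.0]))
print(cosine_similarity([3.0, 4.0], [6.0, 8.0]))
```

The dot product equals the cosine similarity only when both vectors are already normalised to unit length, which is why the two are often mentioned together.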

General Structure

The configuration file has a hierarchical structure with the following main sections:

- save_dir: The directory where the experiment results will be saved.

- ablation: Settings for ablation studies.

- data: Configuration for data ingestion.

- model: Model definition and settings.

- lora: Configuration for LoRA (Low-Rank Adaptation).

- training: Settings for the training process.

- inference: Configuration for the inference stage.

Each section contains subsections and parameters that fine-tune the behavior of the toolkit.

Data

The data section defines how the input data is loaded and preprocessed. It includes the following parameters:

Parameters

- file_type: The type of the input file, which can be "json", "csv", or "huggingface".

- path: The path to the input file or the name of the Hugging Face dataset.

- prompt: The prompt template used for formatting the input data. Use brackets to specify column names.

- prompt_stub: The prompt stub used during training (i.e. this will be omitted during inference for completion). Use brackets to specify the column name.

- train_size: The size of the training set, either as a float (proportion) or an integer (number of examples).

- test_size: The size of the test set, either as a float (proportion) or an integer (number of examples).

- train_test_split_seed: The random seed used for splitting the data into train and test sets.

Example

data:
  file_type: "csv"
  path: "path/to/your/dataset.csv"
  prompt: >-
    Below is an instruction that describes a task.
    Write a response that appropriately completes the request.
    ### Instruction: {instruction}
    ### Input: {input}
    ### Output:
  prompt_stub: >-
    {output}
  test_size: 0.1
  train_size: 0.9
  train_test_split_seed: 42
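The relationship between prompt and prompt_stub can be sketched with Python's built-in string formatting: column values fill the bracketed placeholders, and the stub is appended only for training examples. The helper `format_row` and the single-space separator between prompt and stub are assumptions made for illustration; the toolkit's own formatting code may differ.

```python
PROMPT = (
    "Below is an instruction that describes a task. "
    "Write a response that appropriately completes the request. "
    "### Instruction: {instruction} ### Input: {input} ### Output:"
)
PROMPT_STUB = "{output}"


def format_row(row: dict, include_stub: bool) -> str:
    """Fill the template from one row; append the stub only for training examples."""
    text = PROMPT.format(**row)  # extra columns in the row are simply ignored
    if include_stub:
        text += " " + PROMPT_STUB.format(**row)
    return text


row = {"instruction": "Add the numbers.", "input": "2 and 3", "output": "5"}
train_text = format_row(row, include_stub=True)   # ends with the gold answer
test_text = format_row(row, include_stub=False)   # ends where the model should continue
print(train_text)
print(test_text)
```

A placeholder that names a column missing from the data raises a KeyError in this sketch, which mirrors the requirement that bracketed names match the dataset's columns.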

Model

The model section defines the base model and load settings. It includes the following parameters:

Parameters

- hf_model_ckpt: The path or name of the pre-trained model checkpoint from the Hugging Face Model Hub.

- device_map: The device map for model parallelism. Set to "auto" for automatic device mapping or specify a custom device map.

- quantize: Boolean flag to enable quantization of the model weights; if true, the model is loaded with the bitsandbytes config.

- bitsandbytes: Settings for quantization using the BitsAndBytesConfig object within transformers.

  - load_in_8bit: Flag to enable 8-bit quantization.

  - llm_int8_threshold: Outlier threshold for 8-bit quantization.

  - llm_int8_skip_modules: List of module names to exclude from 8-bit quantization.

  - llm_int8_enable_fp32_cpu_offload: Flag to enable offloading of non-quantized weights to CPU.

  - load_in_4bit: Flag to enable 4-bit quantization using bitsandbytes.

  - bnb_4bit_compute_dtype: Compute dtype for 4-bit quantization.

  - bnb_4bit_quant_type: Quantization data type for 4-bit quantization.

  - bnb_4bit_use_double_quant: Flag to enable double quantization for 4-bit quantization.

Example

model:
  hf_model_ckpt: "NousResearch/Llama-2-7b-hf"
  device_map: "auto"
  quantize: true
  bitsandbytes:
    load_in_4bit: true
    bnb_4bit_compute_dtype: "bf16"
    bnb_4bit_quant_type: "nf4"
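A rough back-of-the-envelope calculation shows why the quantization settings matter. The sketch assumes a round 7 billion parameters for a "7b" checkpoint and counts weight storage only; quantization constants, activations, optimizer state and the LoRA adapter itself are ignored, so real memory use will be higher.

```python
def weight_memory_gb(n_params: float, bits_per_param: int) -> float:
    """Approximate weight storage in gigabytes (10^9 bytes), ignoring overhead."""
    return n_params * bits_per_param / 8 / 1e9


n_params = 7e9  # assumed round figure for a "7b" checkpoint
for label, bits in [("16-bit", 16), ("8-bit", 8), ("4-bit", 4)]:
    print(label, weight_memory_gb(n_params, bits), "GB")
```

Under these assumptions, loading in 4-bit cuts weight storage to about a quarter of the 16-bit footprint.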

LoRA

The lora section configures the Low-Rank Adaptation (LoRA) settings. Supplied arguments are used to construct a peft LoraConfig object. It includes the following parameters:

Parameters

- task_type: Type of transformer architecture; use CAUSAL_LM for decoder-only models and SEQ_2_SEQ_LM for encoder-decoder models.

- r: The rank of the LoRA adaptation matrices.

- lora_alpha: The scaling factor for the LoRA adaptation.

- lora_dropout: The dropout probability for the LoRA layers.

- target_modules: The list of module names to apply LoRA to.

- fan_in_fan_out: Flag to indicate if the layer weights are stored in a (fan_in, fan_out) order.

- modules_to_save: List of additional module names to save in the final checkpoint.

- layers_to_transform: The list of layer indices to apply LoRA to.

- layers_pattern: The regular expression pattern to match layer names for LoRA application.

Examples

lora:
  r: 32
  lora_alpha: 16
  lora_dropout: 0.1
  target_modules:
    - q_proj
    - v_proj
    - k_proj
    - o_proj
    - up_proj
    - down_proj
    - gate_proj
  fan_in_fan_out: false
  modules_to_save: null
  layers_to_transform: null
  layers_pattern: null

Advanced Settings

fan_in_fan_out

The fan_in_fan_out parameter is a boolean flag that indicates whether the weights of the layers being adapted are stored in a (fan_in, fan_out) order. This boolean flag is important for correctly applying the LoRA adaptation.

lora:
  fan_in_fan_out: true

In this example, setting fan_in_fan_out to true indicates that the weights of the layers being adapted are stored in a (fan_in, fan_out) order. If the weights are stored in a different order, you should set this parameter to false.

layers_to_transform

The layers_to_transform parameter specifies the indices of the layers to which LoRA should be applied. This allows you to selectively apply LoRA to specific layers of the model.

lora:
  layers_to_transform: [2, 4, 6]

In this example, LoRA will be applied to the layers with indices 2, 4, and 6. The layer indices are zero-based, so the first layer has an index of 0, the second layer has an index of 1, and so on.

You can also specify a single layer index:

lora:
  layers_to_transform: 3

In this case, LoRA will be applied only to the layer with index 3.

layers_pattern

The layers_pattern parameter allows the user to specify a regular expression pattern to match the names of the layers to which LoRA should be applied. This provides a more flexible way to select layers based on their names.

lora:
  layers_pattern: 'transformer\.h\.\d+\.attn'

In this example, the regular expression pattern transformer\.h\.\d+\.attn will match the names of the attention layers in a transformer model. The pattern will match layer names like transformer.h.0.attn, transformer.h.1.attn, and so on.

You can adjust the regular expression pattern to match the specific layer names in your model.
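Before putting an expression into the config, it can help to check what it accepts against a few candidate names. The sketch below uses Python's standard re module with made-up module names; it only illustrates what the expression itself matches, and the choice of a full match (rather than a search) is an assumption, since how the pattern is consumed downstream is not shown here.

```python
import re

pattern = re.compile(r"transformer\.h\.\d+\.attn")

# Hypothetical module names for illustration.
names = [
    "transformer.h.0.attn",
    "transformer.h.11.attn",
    "transformer.h.0.mlp",
    "transformer.wte",
]
matched = [name for name in names if pattern.fullmatch(name)]
print(matched)
```

Escaping the dots matters: an unescaped "." would match any character, not just a literal dot.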

Training

The training section configures the training process. It includes two subsections:

Parameters

- training_args: General training arguments such as the number of epochs, batch size, gradient accumulation steps, optimizer, learning rate, etc.

  - num_train_epochs: Number of training epochs.

  - per_device_train_batch_size: Batch size per training device.

  - gradient_accumulation_steps: Number of steps for gradient accumulation.

  - gradient_checkpointing: Flag to enable gradient checkpointing.

  - optim: Optimizer to use for training.

  - logging_steps: Number of steps between logging.

  - learning_rate: Learning rate for the optimizer.

  - bf16: Flag to enable BF16 mixed-precision training.

  - tf32: Flag to enable TF32 mixed-precision training.

  - fp16: Flag to enable FP16 mixed-precision training.

  - max_grad_norm: Maximum gradient norm for gradient clipping.

  - warmup_ratio: Ratio of total training steps used for a linear warmup.

  - lr_scheduler_type: Type of learning rate scheduler.

- sft_args: Arguments specific to the SFT (Supervised Fine-Tuning) process.

  - max_seq_length: Maximum sequence length for input sequences.

  - neftune_noise_alpha: Alpha parameter for NEFTune noise embeddings. If not None, activates NEFTune noise embeddings.

Example

training:
  training_args:
    num_train_epochs: 5
    per_device_train_batch_size: 4
    gradient_accumulation_steps: 4
    gradient_checkpointing: true
    optim: "paged_adamw_32bit"
    logging_steps: 100
    learning_rate: 2.0e-4
    bf16: true
    tf32: true
    max_grad_norm: 0.3
    warmup_ratio: 0.03
    lr_scheduler_type: "constant"
  sft_args:
    max_seq_length: 5000
    neftune_noise_alpha: null
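Several of these arguments interact, so a short worked calculation may help. The sketch approximates the effective batch size, the number of optimizer steps and the warmup length for the values in the example; the dataset size of 10,000 examples and the single-device setup are assumptions, and a real trainer's step accounting can differ slightly.

```python
import math


def effective_batch_size(per_device: int, grad_accum: int, n_devices: int = 1) -> int:
    """Examples contributing to each optimizer step."""
    return per_device * grad_accum * n_devices


def optimizer_steps(n_examples: int, epochs: int, batch: int) -> int:
    """Total optimizer steps, rounding each epoch up to a whole step."""
    return math.ceil(n_examples / batch) * epochs


# 4 examples per device, accumulated over 4 steps, on one device.
batch = effective_batch_size(per_device=4, grad_accum=4)
# 10_000 training examples is an assumed dataset size.
steps = optimizer_steps(n_examples=10_000, epochs=5, batch=batch)
# warmup_ratio of 0.03 expressed as a number of steps.
warmup = math.ceil(0.03 * steps)
print(batch, steps, warmup)
```

Raising gradient_accumulation_steps increases the effective batch size without increasing per-device memory, at the cost of fewer optimizer steps per epoch. As far as the Hugging Face schedulers go, the "constant" schedule applies no warmup ("constant_with_warmup" is the variant that does), so warmup_ratio is worth double-checking against the chosen lr_scheduler_type.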

Inference

The inference section sets the parameters for the inference stage. It includes:

Parameters

- max_new_tokens: The maximum number of new tokens to generate.

- use_cache: Whether to use the cache during inference.

- do_sample: Whether to use sampling during inference.

- top_p: The cumulative probability threshold for top-p sampling.

- temperature: The temperature value for sampling.

Example

inference:
  max_new_tokens: 1024
  use_cache: true
  do_sample: true
  top_p: 0.9
  temperature: 0.8
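To illustrate what temperature and top_p do to the next-token distribution, here is a minimal pure-Python sketch over four made-up logits. It follows the usual description of temperature scaling and nucleus (top-p) filtering; it is not the generation code used by the toolkit, and the function names are illustrative.

```python
import math


def apply_temperature(logits: list[float], temperature: float) -> list[float]:
    """Softmax over logits divided by the temperature."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def top_p_filter(probs: list[float], top_p: float) -> list[float]:
    """Keep the smallest set of most likely tokens whose mass reaches top_p, then renormalise."""
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    kept, mass = [], 0.0
    for i in order:
        kept.append(i)
        mass += probs[i]
        if mass >= top_p:
            break
    return [probs[i] / mass if i in kept else 0.0 for i in range(len(probs))]


probs = apply_temperature([2.0, 1.0, 0.5, -1.0], temperature=0.8)
filtered = top_p_filter(probs, top_p=0.9)
print([round(p, 3) for p in filtered])
```

A temperature below 1 sharpens the distribution toward the most likely tokens, and top_p then removes the low-probability tail before sampling. With do_sample set to false, neither parameter affects the output.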

Quality Assurance

🚧 The qa section is not yet directly configurable in the provided configuration file and is currently being integrated into the CLI toolkit. In the meantime, however, you can manually execute and extend the toolkit to include quality assurance tests by implementing custom test classes that inherit from LLMQaTest.

Ablation

The ablation section controls the settings for ablation studies. It includes:

Parameters

- use_ablate: Whether to perform ablation studies.

- study_name: The name of the ablation study.

TIP

When use_ablate is set to true, the toolkit will generate multiple configurations by permuting the specified parameters. This allows you to compare different settings and their impact on the model's performance.

Example

ablation:
  use_ablate: true
  study_name: "ablation_study_1"
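Generating multiple configurations from a set of candidate values can be sketched as a Cartesian product over those values. The helper `expand_ablation` and the grid format (a mapping from a section/key pair to its candidate values) are hypothetical; how the toolkit marks parameters for ablation in the YAML file is not described here.

```python
import copy
import itertools


def expand_ablation(base: dict, grid: dict) -> list[dict]:
    """Return one config per combination of the values listed in `grid`.

    `grid` maps a (section, key) pair to the candidate values for that setting.
    """
    paths = list(grid)
    configs = []
    for combo in itertools.product(*(grid[p] for p in paths)):
        cfg = copy.deepcopy(base)  # never mutate the base config
        for (section, key), value in zip(paths, combo):
            cfg[section][key] = value
        configs.append(cfg)
    return configs


base = {"lora": {"r": 32, "lora_alpha": 16}, "training": {"learning_rate": 2.0e-4}}
grid = {("lora", "r"): [16, 32, 64], ("training", "learning_rate"): [1.0e-4, 2.0e-4]}
configs = expand_ablation(base, grid)
print(len(configs))  # 3 ranks x 2 learning rates
```

The number of runs grows multiplicatively with each added parameter, so it is worth keeping the candidate lists short.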

Putting it All Together

To create a custom configuration file, start by copying the provided template and modify the parameters according to your needs. Pay attention to the structure and indentation of the YAML file to ensure it is parsed correctly.

Once you have defined your configuration, you can run the toolkit with your custom settings. The toolkit will load the configuration file, preprocess the data, train the model, perform inference and optionally run quality assurance tests and ablation studies based on the configuration.

Remember to adjust the paths, prompts and other parameters to match your specific use case. Experiment with different settings to find the optimal configuration for your task.

Example

Here's an example of a complete configuration file combining all the sections:

save_dir: "./experiments"

ablation:
  use_ablate: true
  study_name: "ablation_study_1"

data:
  file_type: "csv"
  path: "path/to/your/dataset.csv"
  prompt: "Below is an instruction that describes a task. Write a response that appropriately completes the request. ### Instruction: {instruction} ### Input: {input} ### Output:"
  prompt_stub: "{output}"
  test_size: 0.1
  train_size: 0.9
  train_test_split_seed: 42

model:
  hf_model_ckpt: "NousResearch/Llama-2-7b-hf"
  device_map: "auto"
  quantize: true
  bitsandbytes:
    load_in_4bit: true
    bnb_4bit_compute_dtype: "bf16"
    bnb_4bit_quant_type: "nf4"

lora:
  r: 32
  lora_alpha: 16
  lora_dropout: 0.1
  target_modules:
    - q_proj
    - v_proj
    - k_proj
    - o_proj
    - up_proj
    - down_proj
    - gate_proj
  fan_in_fan_out: false
  modules_to_save: null
  layers_to_transform: null
  layers_pattern: null

training:
  training_args:
    num_train_epochs: 5
    per_device_train_batch_size: 4
    gradient_accumulation_steps: 4
    gradient_checkpointing: true
    optim: "paged_adamw_32bit"
    logging_steps: 100
    learning_rate: 2.0e-4
    bf16: true
    tf32: true
    max_grad_norm: 0.3
    warmup_ratio: 0.03
    lr_scheduler_type: "constant"
  sft_args:
    max_seq_length: 5000
    neftune_noise_alpha: null

inference:
  max_new_tokens: 1024
  use_cache: true
  do_sample: true
  top_p: 0.9
  temperature: 0.8

Extending Modules

The toolkit provides a modular and extensible architecture that allows developers to customize and enhance its functionality to suit their specific needs. Each component of the toolkit, such as data ingestion, fine tuning, inference and quality assurance testing, is designed to be easily extendable.

General Guidelines

There are various scenarios where you might want to extend a particular module of the toolkit. For example:

Data Ingestion: If you have a custom data format or source that is not supported out of the box, the Ingestor class can be extended to handle your specific data format. For instance, if you have data stored in a proprietary binary format, you can create a new subclass of Ingestor that reads and processes your binary data and converts it into a compatible format for the toolkit.

Fine-tuning: If you want to experiment with different fine-tuning techniques or modify the fine-tuning process, you can extend the Finetune class. For example, if you want to incorporate a custom loss function or implement a new fine-tuning algorithm, you can create a subclass of Finetune and override the necessary methods to include your custom logic.

Inference: If you need to modify the inference process or add custom post-processing steps, you can extend the Inference class. For instance, if you want to apply domain-specific post-processing to the generated text or integrate the inference process with an external API, you can create a subclass of Inference and implement your custom functionality.

Quality Assurance (QA) Testing: If you have specific quality metrics or evaluation criteria that are not included in the existing QA tests, you can extend the LLMQaTest class to define your own custom tests. For example, if you want to evaluate the generated text based on domain-specific metrics or compare it against a custom benchmark, you can create a new subclass of LLMQaTest and implement your custom testing logic.

By extending the toolkit's components, you can tailor it to your specific requirements and incorporate custom functionality that is not provided by default. This flexibility allows you to adapt the toolkit to various domains, data formats, and evaluation criteria.

In the following sections, we will provide detailed guidance on how to extend each component of the toolkit, along with code examples.

Extending Data Ingestor

To extend the data ingestor component, follow these steps:

- Open the file src/data/ingestor.py.

- Define a new class that inherits from the abstract base class Ingestor.

- Implement the required abstract method to_dataset in your custom ingestor class. This method should load and preprocess the data from the specified source and return a Dataset object.

- Update the `get_ingestor` function to include your custom ingestor class based on a new file type or data source.

from src.data.ingestor import Ingestor


class CustomIngestor(Ingestor):
    def __init__(self, path):
        self.path = path

    def to_dataset(self):
        # Implement the logic to load and preprocess data from the specified path
        ...


def get_ingestor(data_type):
    if data_type == "custom":
        return CustomIngestor
    ...
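As a more concrete illustration of these steps, the sketch below implements a hypothetical ingestor for tab-separated files. So that it runs on its own, it defines a stand-in Ingestor base class and returns a plain list of row dictionaries; in the toolkit you would inherit from src.data.ingestor.Ingestor and return a Dataset object instead. The TsvIngestor name, the "tsv" file type and the registry dictionary are all assumptions.

```python
import csv
import os
import tempfile
from abc import ABC, abstractmethod


class Ingestor(ABC):
    """Stand-in for src.data.ingestor.Ingestor so the sketch runs on its own."""

    def __init__(self, path: str):
        self.path = path

    @abstractmethod
    def to_dataset(self):
        ...


class TsvIngestor(Ingestor):
    """Hypothetical ingestor for tab-separated files."""

    def to_dataset(self):
        # Returns a list of row dicts; the real method would wrap these in a Dataset.
        with open(self.path, newline="", encoding="utf-8") as handle:
            return list(csv.DictReader(handle, delimiter="\t"))


def get_ingestor(data_type: str):
    registry = {"tsv": TsvIngestor}  # the built-in types would be registered here too
    return registry[data_type]


# Write a tiny sample file and read it back through the registry.
with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "sample.tsv")
    with open(path, "w", encoding="utf-8") as handle:
        handle.write("instruction\toutput\nAdd 2 and 3\t5\n")
    rows = get_ingestor("tsv")(path).to_dataset()
print(rows)
```

Each column header becomes a key that a prompt template can reference, so the file's header row should match the placeholders used in the config.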

Extending Finetuning

To extend the finetuning component, follow these steps:

- Create a new file in the src/finetune directory, e.g., custom_finetune.py.

- In this file, define a new class that inherits from the abstract base class Finetune from src/finetune/finetune.py.

- Implement the required abstract methods finetune and save_model in your custom finetuning class.

- The finetune method should take the training dataset and perform the finetuning process using the provided configuration.

- The save_model method should save the fine-tuned model to the specified directory.

- Modify the toolkit.py file to import your custom finetuning class and use it instead of the default LoRAFinetune class if needed.

from datasets import Dataset

from src.finetune.finetune import Finetune


class CustomFinetune(Finetune):
    def finetune(self, train_dataset: Dataset):
        # Implement your custom finetuning logic here
        ...

    def save_model(self):
        # Implement the logic to save the finetuned model
        ...

Extending Inference

To extend the inference component, follow these steps:

- Create a new file in the src/inference directory, e.g., custom_inference.py.

- In this file, define a new class that inherits from the abstract base class Inference from src/inference/inference.py.

- Implement the required abstract methods infer_one and infer_all in your custom inference class.

- The infer_one method should take a single prompt and generate the model's prediction.

- The infer_all method should iterate over the test dataset and generate predictions for each example.

- Modify the toolkit.py file to import your custom inference class and use it instead of the default LoRAInference class if needed.

from src.inference.inference import Inference


class CustomInference(Inference):
    def infer_one(self, prompt: str):
        # Implement the logic to generate a prediction for a single prompt
        ...

    def infer_all(self):
        # Implement the logic to generate predictions for the entire test dataset
        ...

Extending QA Test

To extend the quality assurance (QA) tests, follow these steps:

- Open the file src/qa/qa_tests.py.

- Define a new class that inherits from the abstract base class LLMQaTest from src/qa/qa.py.

- Implement the required abstract property test_name and the abstract method get_metric in your custom QA test class.

- The test_name property should return a string representing the name of the test.

- The get_metric method should take the prompt, ground truth, and model prediction, and return a metric value (e.g., float, int, or bool) indicating the test result.

- Include instances of the new CustomQATest when instantiating the LLMTestSuite object.

from src.qa.qa import LLMQaTest


class CustomQATest(LLMQaTest):
    @property
    def test_name(self):
        return "Custom QA Test"

    def get_metric(self, prompt, ground_truth, model_prediction):
        # Implement the logic to calculate the metric for the custom QA test
        ...


test_suite = LLMTestSuite([JaccardSimilarityTest(), CustomQATest()], prompts, ground_truths, model_preds)
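To show what a filled-in test might look like, the sketch below implements a hypothetical LengthRatioTest that compares the word count of the prediction with that of the reference. It defines a stand-in LLMQaTest base class so that it runs on its own; in the toolkit you would inherit from src.qa.qa.LLMQaTest instead. The test name and the metric are illustrative assumptions, not tests shipped with the toolkit.

```python
from abc import ABC, abstractmethod


class LLMQaTest(ABC):
    """Stand-in for src.qa.qa.LLMQaTest so the sketch runs on its own."""

    @property
    @abstractmethod
    def test_name(self) -> str:
        ...

    @abstractmethod
    def get_metric(self, prompt: str, ground_truth: str, model_prediction: str):
        ...


class LengthRatioTest(LLMQaTest):
    """Hypothetical test: word count of the prediction relative to the reference."""

    @property
    def test_name(self) -> str:
        return "Length Ratio"

    def get_metric(self, prompt: str, ground_truth: str, model_prediction: str) -> float:
        reference_words = len(ground_truth.split())
        if reference_words == 0:
            return 0.0  # avoid dividing by zero on an empty reference
        return len(model_prediction.split()) / reference_words


test = LengthRatioTest()
score = test.get_metric(
    prompt="Summarise the report.",
    ground_truth="Sales rose in the third quarter.",
    model_prediction="Sales rose.",
)
print(test.test_name, score)
```

Returning a plain number keeps the result easy to aggregate across examples; a value far from 1 would flag predictions that are much shorter or longer than the reference.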

Contribution Guide

Thank you for your interest in contributing to this open-source project.

Getting Started

To start contributing to the project, follow these steps:

- Fork the repository on GitHub.

- Clone your forked repository to your local machine.

- Create a new branch for your feature or bug fix.

- Make your changes and commit them with descriptive commit messages.

- Push your changes to your forked repository.

- Submit a pull request to the main repository's main branch.

Before submitting a pull request, ensure that your code follows the project's coding style and passes all existing tests (🚧 work in progress).

Development Setup

To set up the development environment, follow these steps:

- Install the required dependencies using recommended installation methods.

- Run the existing tests to ensure everything is functioning correctly (🚧 work in progress).

Coding Guidelines

When contributing code to the project, please adhere to the following guidelines:

- Follow the PEP 8 style guide for Python code.

- Use the black formatter.

- Use meaningful variable and function names that clearly convey their purpose.

- Write docstrings for classes, methods, and functions to provide clear documentation.

- Include inline comments to explain complex or non-obvious code sections.

- Break down large functions or methods into smaller, reusable components.

- Write unit tests for new features or bug fixes to ensure code correctness.

Contributing to Documentation

Improvements to the project's documentation (either the documentation site or docstrings in codebase) are appreciated. If you find any errors, inconsistencies or areas that need clarification, please feel free to submit a pull request with the necessary changes.

When contributing to the documentation, follow these guidelines:

- Use clear and concise language.

- Provide step-by-step instructions or examples when appropriate.

- Ensure that the documentation is up to date with the latest changes in the codebase.

- Maintain a consistent formatting and structure throughout the documentation.

Reporting Issues

If you encounter any bugs or issues, or have suggestions for improvements, please submit an issue on the project's GitHub repository. When submitting an issue, provide as much detail as possible, including:

- A clear and descriptive title.

- Steps to reproduce the issue or bug.

- Expected behavior and actual behavior.

- Any relevant error messages or logs.

- Your operating system and Python version.

PR Process

When submitting a pull request, please ensure that:

- Your code adheres to the project's coding guidelines.

- Your changes are well-tested and do not introduce new bugs.

- Your commit messages are descriptive and explain the purpose of the changes.

- You have updated the relevant documentation, if necessary.

Once your pull request is submitted, the project maintainers will review your changes and provide feedback. Be prepared to make revisions or address any concerns raised during the review process.

Licensing

By contributing to this project, you agree that your contributions will be licensed under the terms of the LICENSE file in the repository.

Data

Ingestors

Ingestor

class

src.data.ingestor.Ingestor

( path: str )

The Ingestor class is an abstract base class for data ingestors.

Parameters

path: str - The path of the dataset.

to_dataset() -> Dataset

( )

An abstract method to be implemented by subclasses. Converts the input data to a Dataset object.

Returns

Dataset - The converted Dataset object.

JSON Ingestor

class

src.data.ingestor.JsonIngestor

( path: str )

The JsonIngestor class is a subclass of Ingestor for ingesting JSON data.

Parameters

path: str - The path of the JSON dataset.

to_dataset() -> Dataset

( )

Converts the JSON data to a Dataset object.

Returns

Dataset - The converted Dataset object.

CSV Ingestor

class

src.data.ingestor.CsvIngestor

( path: str )

The CsvIngestor class is a subclass of Ingestor for ingesting CSV data.

Parameters

path: str - The path of the CSV dataset.

to_dataset() -> Dataset

( )

Converts the CSV data to a Dataset object.

Returns

Dataset - The converted Dataset object.

Huggingface Ingestor

class

src.data.ingestor.HuggingfaceIngestor

( path: str )

The HuggingfaceIngestor class is a subclass of Ingestor for ingesting data from a HuggingFace dataset.

Parameters

path: str - The path or name of the HuggingFace dataset.

to_dataset() -> Dataset

( )

Converts the HuggingFace data to a Dataset object.

Returns

Dataset - The converted Dataset object.

Utilities

function

src.data.ingestor.get_ingestor

( data_type: str )

A function to get the appropriate ingestor class based on the data type.

Parameters

data_type: str - The type of data ("json", "csv", or "huggingface").

Returns

Ingestor - The corresponding ingestor class.

Dataset Generator

Dataset Generator

class

src.data.dataset_generator.DatasetGenerator

( file_type: str, path: str, prompt: str, prompt_stub: str, test_size: Union[float, int], train_size: Union[float, int], train_test_split_seed: int )

The DatasetGenerator class is responsible for generating and formatting datasets for training and testing.

Parameters

- file_type: str - The type of input file ("json", "csv", or "huggingface").

- path: str - The path to the input file or HuggingFace dataset.

- prompt: str - The prompt template for formatting the dataset.

- prompt_stub: str - The prompt stub used during training.

- test_size: Union[float, int] - The size of the test set.

- train_size: Union[float, int] - The size of the training set.

- train_test_split_seed: int - The random seed for splitting the dataset.

get_dataset

( )

Generates and returns the formatted train and test datasets.

Returns

A tuple containing the train and test datasets.

save_dataset

( save_dir: str )

Saves the generated dataset to the specified directory.

Parameters

save_dir: str - The directory to save the dataset.

load_dataset_from_pickle

( save_dir: str )

Loads the dataset from a pickle file in the specified directory.

Parameters

save_dir: str - The directory containing the dataset pickle file.

Returns

A tuple containing the loaded train and test datasets.

Fine-tuning

Fine-tuning Classes

Finetune

class

src.finetune.finetune.Finetune

The Finetune class is an abstract base class for finetuning models.

finetune

( )

An abstract method to be implemented by subclasses. Fine-tunes the model.

save_model

( )

An abstract method to be implemented by subclasses. Saves the fine-tuned model.

LoRAFinetune

class

src.finetune.lora.LoRAFinetune

( config: Config, directory_helper: DirectoryHelper )

The LoRAFinetune class is a subclass of Finetune for finetuning models using LoRA (Low-Rank Adaptation).

Parameters

- config: Config - The configuration object.
- directory_helper: DirectoryHelper - The directory helper object.

finetune

( train_dataset: Dataset )

Fine-tunes the model using the provided training dataset.

Parameters

- train_dataset: Dataset - The training dataset.

save_model

( )

Saves the fine-tuned model.

Inference

Inference Classes

Inference

class

src.inference.inference.Inference

The Inference class is an abstract base class for performing inference.

infer_one

( prompt: str )

An abstract method to be implemented by subclasses. Performs inference on a single prompt.

Parameters

- prompt: str - The input prompt.

infer_all

( )

An abstract method to be implemented by subclasses. Performs inference on all test examples.

LoRAInference

class

src.inference.lora.LoRAInference

( test_dataset: Dataset, label_column_name: str, config: Config, dir_helper: DirectoryHelper )

The LoRAInference class is a subclass of Inference for performing inference using LoRA models.

Parameters

- test_dataset: Dataset - The test dataset.
- label_column_name: str - The name of the label column in the test dataset.
- config: Config - The configuration object.
- dir_helper: DirectoryHelper - The directory helper object.

infer_one

( prompt: str )

Performs inference on a single prompt and returns the generated text.

Parameters

- prompt: str - The input prompt.

Returns

str - The generated text.

infer_all

( )

Performs inference on all test examples in test_dataset and saves the results in a csv.

Quality Assurance

Quality Assurance Tests

LLMQaTest

class

src.qa.qa.LLMQaTest

The LLMQaTest class is an abstract base class for defining quality assurance tests for language models.

test_name

( )

An abstract property to be implemented by subclasses. Returns the name of the test.

get_metric

( prompt: str, ground_truth: str, model_pred: str )

Computes the metric for the test based on the input prompt, the ground truth output, and the model's predicted output.

Parameters

- prompt: str - The input prompt.
- ground_truth: str - The ground truth output.
- model_pred: str - The model's predicted output.

Returns

The computed metric, which can be a float, an int, or a bool.
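As an illustration of the interface described above, the sketch below defines a stand-in abstract base class with the same two members (test_name and get_metric) and a hypothetical ExactMatchTest subclass. The stand-in exists only so the example runs without the toolkit installed; in a real project the subclass would inherit from src.qa.qa.LLMQaTest instead.

```python
from abc import ABC, abstractmethod
from typing import Union


class LLMQaTest(ABC):
    """Stand-in mirroring the documented interface, not the toolkit's own class."""

    @property
    @abstractmethod
    def test_name(self) -> str:
        ...

    @abstractmethod
    def get_metric(
        self, prompt: str, ground_truth: str, model_pred: str
    ) -> Union[float, int, bool]:
        ...


class ExactMatchTest(LLMQaTest):
    """Hypothetical custom test: True when the prediction equals the ground truth."""

    @property
    def test_name(self) -> str:
        return "Exact Match"

    def get_metric(self, prompt: str, ground_truth: str, model_pred: str) -> bool:
        # Ignore leading and trailing whitespace when comparing.
        return ground_truth.strip() == model_pred.strip()


exact_match = ExactMatchTest()
result = exact_match.get_metric("Summarize: ...", "A cat sat.", " A cat sat. ")
print(exact_match.test_name, result)
```

Because both members are abstract, instantiating the base class directly raises a TypeError, which catches subclasses that forget to implement one of them.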

Length Test

class

src.qa.qa_tests.LengthTest

( )

A quality assurance test that measures the absolute difference in length between the ground truth text and the model's prediction. This test aims to evaluate the summary length consistency, providing a straightforward metric for assessing how closely the model's output matches the expected length of the ground truth.

test_name

( ) -> str

Returns

str - "Summary Length Test"

get_metric

( prompt: str, ground_truth: str, model_pred: str ) -> int

Parameters

- prompt: str - The input prompt.
- ground_truth: str - The ground truth output.
- model_pred: str - The model's predicted output.

Returns

int - the absolute difference in character length between the ground truth and model prediction
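The metric described above reduces to one line of arithmetic. The function below is a minimal sketch of it; the name length_difference is illustrative and is not taken from the toolkit.

```python
def length_difference(ground_truth: str, model_pred: str) -> int:
    """Absolute difference in character count between the two texts."""
    return abs(len(ground_truth) - len(model_pred))


diff = length_difference("The cat sat on the mat.", "The cat sat.")
print(diff)
```

A value of 0 means the prediction has exactly the same number of characters as the reference; the metric says nothing about whether the content matches.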

Jaccard Similarity

class

src.qa.qa_tests.JaccardSimilarityTest

( )

Evaluates the similarity between the ground truth text and the model's prediction using the Jaccard Similarity measure. This metric calculates the size of the intersection divided by the size of the union of the sample sets, providing insight into how similar the predicted text is to the ground truth in terms of unique words.

test_name

( ) -> str

Returns

str - "Jaccard Similarity"

get_metric

(prompt: str, ground_truth: str, model_prediction: str) -> float

Parameters

- prompt: str - The input prompt.
- ground_truth: str - The ground truth output.
- model_prediction: str - The model's predicted output.

Returns

float - the Jaccard Similarity score between the ground truth and model prediction.
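The Jaccard measure described above can be sketched in a few lines. The tokenization used here (lowercasing and splitting on whitespace) is an assumption made for illustration; the toolkit's own test may tokenize differently.

```python
def jaccard_similarity(ground_truth: str, model_prediction: str) -> float:
    """|intersection| / |union| over the sets of unique words in each text."""
    truth_words = set(ground_truth.lower().split())
    pred_words = set(model_prediction.lower().split())
    union = truth_words | pred_words
    if not union:
        # Both texts are empty; treating them as identical is a choice made here.
        return 1.0
    return len(truth_words & pred_words) / len(union)


score = jaccard_similarity("the cat sat on the mat", "the cat lay on the rug")
print(round(score, 4))
```

In this example the two texts share three unique words ("the", "cat", "on") out of seven in the union, so the score is 3/7.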

Dot Product Similarity

class

src.qa.qa_tests.DotProductSimilarityTest

( )

Evaluates the semantic similarity between the ground truth and the model's prediction by computing the dot product between their sentence embeddings. This test aims to capture the closeness of the meanings of the two texts, providing a more nuanced understanding of the model's performance in preserving semantic content.

test_name

( ) -> str

Returns

str - "Semantic Similarity"

get_metric

(prompt: str, ground_truth: str, model_prediction: str) -> float

Parameters

- prompt: str - The input prompt.
- ground_truth: str - The ground truth output.
- model_prediction: str - The model's predicted output.

Returns

float - the dot product similarity score, indicating the degree of semantic similarity between the ground truth and model prediction.
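To make the dot-product score concrete, the sketch below uses two tiny hand-written vectors in place of real sentence embeddings. The vectors and helper names are hypothetical; a real run would obtain the embeddings from a sentence-embedding model. When both vectors are normalized to unit length, the dot product equals the cosine similarity.

```python
import math
from typing import List, Sequence


def normalize(vector: Sequence[float]) -> List[float]:
    """Scale a vector to unit length."""
    norm = math.sqrt(sum(x * x for x in vector))
    if norm == 0.0:
        raise ValueError("cannot normalize a zero vector")
    return [x / norm for x in vector]


def dot_product(a: Sequence[float], b: Sequence[float]) -> float:
    """Sum of element-wise products of two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    return sum(x * y for x, y in zip(a, b))


# Toy 2-dimensional "embeddings", for illustration only.
truth_embedding = normalize([3.0, 4.0])
pred_embedding = normalize([4.0, 3.0])
score = dot_product(truth_embedding, pred_embedding)
print(round(score, 2))
```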

ROUGE Score

class

src.qa.qa_tests.RougeScoreTest

( )

Measures the Rouge score, specifically the precision of Rouge-1, between the model's prediction and the ground truth. This test focuses on the overlap of unigrams, providing a metric for assessing the model's ability to reproduce key words and phrases from the ground truth.

test_name

( ) -> str

Returns

str - "Rouge Score"

get_metric

( prompt: str, ground_truth: str, model_pred: str ) -> float

Parameters

- prompt: str - The input prompt.
- ground_truth: str - The ground truth output.
- model_pred: str - The model's predicted output.

Returns

float - the precision component of the Rouge-1 score, reflecting the proportion of the model's unigrams found in the ground truth.
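ROUGE-1 precision, as described above, is the share of the prediction's unigrams that also occur in the ground truth, with repeated words counted at most as often as they appear in the reference. The sketch below is a hand-rolled approximation with whitespace tokenization (an assumption); dedicated ROUGE libraries apply their own tokenization and optional stemming, so their numbers can differ.

```python
from collections import Counter


def rouge1_precision(ground_truth: str, model_pred: str) -> float:
    """Clipped unigram overlap divided by the number of predicted unigrams."""
    truth_counts = Counter(ground_truth.lower().split())
    pred_counts = Counter(model_pred.lower().split())
    total_pred = sum(pred_counts.values())
    if total_pred == 0:
        return 0.0
    # Counter intersection keeps the minimum count per word (clipping).
    overlap = sum((truth_counts & pred_counts).values())
    return overlap / total_pred


reference = "the cat sat on the mat"
full_match = rouge1_precision(reference, "the cat sat")
half_match = rouge1_precision(reference, "the dog sat down")
print(full_match, half_match)
```

Note that a short prediction made entirely of reference words scores 1.0, which is why precision is usually read alongside recall or a length-based check.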

Word Overlap

class

src.qa.qa_tests.WordOverlapTest

( )

A test that calculates the percentage of word overlap between the ground truth and the model's prediction, after removing common stop words. This metric provides a straightforward measure of content similarity, emphasizing the shared vocabulary while ignoring frequent but less meaningful words.

test_name

( ) -> str

Returns

str - "Word Overlap Test"

get_metric

( prompt: str, ground_truth: str, model_pred: str ) -> float

Parameters

- prompt: str - The input prompt.
- ground_truth: str - The ground truth output.
- model_pred: str - The model's predicted output.

Returns

float - the percentage of overlap in significant words between the ground truth and model prediction, indicating content overlap.

Verb Composition

class

src.qa.qa_tests.VerbPercent

( )

Assesses the composition of the model's prediction by calculating the percentage of verbs within the text. This test provides insights into the dynamic versus static nature of the content generated by the model, with a higher proportion of verbs potentially indicating more active and vivid descriptions.

test_name

( ) -> str

Returns

str - "Verb Composition"

get_metric

(prompt: str, ground_truth: str, model_prediction: str) -> float

Parameters

- prompt: str - The input prompt.
- ground_truth: str - The ground truth output.
- model_prediction: str - The model's predicted output.

Returns

float - the percentage of words classified as verbs in the model prediction, shedding light on the action-oriented nature of the generated text.

Adjective Composition

class

src.qa.qa_tests.AdjectivePercent

( )

Focuses on the proportion of adjectives in the model's prediction to evaluate the descriptiveness and detail within the generated text. This test helps gauge how well the model captures and conveys detailed attributes and qualities of subjects in its outputs.

test_name

( ) -> str

Returns

str - "Adjective Composition"

get_metric

( prompt: str, ground_truth: str, model_pred: str ) -> float

Parameters

- prompt: str - The input prompt.
- ground_truth: str - The ground truth output.
- model_pred: str - The model's predicted output.

Returns

float - the proportion of adjectives, offering insight into the richness and descriptiveness of the model's language.

Noun Composition

class

src.qa.qa_tests.NounPercent

( )

Evaluates the model's prediction by calculating the percentage of nouns, providing a measure of how substantially the model generates content with tangible subjects and entities. This test can indicate the model's ability to maintain focus on key topics and to populate its narratives with relevant nouns.

test_name

( ) -> str

Returns

str - "Noun Composition"

get_metric

( prompt: str, ground_truth: str, model_pred: str ) -> float

Parameters

- prompt: str - The input prompt.
- ground_truth: str - The ground truth output.
- model_pred: str - The model's predicted output.

Returns

float - the percentage of nouns in the text, reflecting on the subject matter density and relevance in the generated content.

Test Runner

LLMTestSuite

class

src.qa.qa.LLMTestSuite

( tests: List[LLMQaTest], prompts: List[str], ground_truths: List[str], model_preds: List[str] )

The LLMTestSuite class represents a suite of quality assurance tests for language models.

Parameters

- tests: List[LLMQaTest] - A list of LLMQaTest objects representing the tests to run.
- prompts: List[str] - A list of input prompts.
- ground_truths: List[str] - A list of ground truth outputs.
- model_preds: List[str] - A list of the model's predicted outputs.

run_tests

( )

Runs all the tests in the suite and returns the results as a dictionary mapping test names to their corresponding metrics.

Returns

A dictionary mapping test names to their corresponding metrics, which can be floats, ints, or bools.

print_test_results

( )

Prints the test results in a tabular format.

save_test_results

( path: str )

Saves the test results to a CSV file.

Parameters

- path: str - The path to save the CSV file.
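The flow of the test runner can be sketched without the toolkit: apply every test to every (prompt, ground truth, prediction) triple, collect the metrics per test name, and write one CSV column per test. The functions below are hypothetical stand-ins that use plain callables instead of LLMQaTest objects, and the CSV layout is an assumption rather than the toolkit's actual output format.

```python
import csv
import io
from typing import Callable, Dict, List, Union

Metric = Union[float, int, bool]
MetricFn = Callable[[str, str, str], Metric]


def run_tests(
    tests: Dict[str, MetricFn],
    prompts: List[str],
    ground_truths: List[str],
    model_preds: List[str],
) -> Dict[str, List[Metric]]:
    """Map each test name to one metric per example."""
    results: Dict[str, List[Metric]] = {name: [] for name in tests}
    for prompt, truth, pred in zip(prompts, ground_truths, model_preds):
        for name, metric_fn in tests.items():
            results[name].append(metric_fn(prompt, truth, pred))
    return results


def results_to_csv(results: Dict[str, List[Metric]]) -> str:
    """Render results as CSV text: one column per test, one row per example."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    names = list(results)
    writer.writerow(names)
    for row in zip(*(results[name] for name in names)):
        writer.writerow(row)
    return buffer.getvalue()


tests = {
    "Length Difference": lambda p, t, m: abs(len(t) - len(m)),
    "Exact Match": lambda p, t, m: t == m,
}
results = run_tests(tests, ["p1", "p2"], ["abc", "hello"], ["abc", "help"])
csv_text = results_to_csv(results)
print(csv_text)
```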

User Interface

User Interface Classes

Generic UI

class

src.ui.ui.UI

The UI class is an abstract base class for user interface components. This class outlines a framework for displaying information and facilitating interaction with users across various stages of the toolkit's execution, including dataset creation, fine-tuning, inference, and quality assurance testing.

This component is designed to be subclassed, with specific implementations providing concrete methods for all interactions required by the toolkit. These interactions could range from input collection for dataset specifications to displaying fine-tuning progress, presenting inference results, and summarizing quality assurance test outcomes.

Utilities

Save Utils

DirectoryList

class

src.utils.save_utils.DirectoryList

( save_dir: str, config_hash: str )

The DirectoryList class represents a structured approach to managing directories for saving various components of experiment results, ensuring organized storage and easy access to different types of data generated during the experiment's lifecycle.

Class Attributes

- save_dir: str - The base directory where all experiment results are saved. It acts as the root directory for storing the outputs of different experiments.
- config_hash: str - A unique identifier for each experiment configuration, used to create separate subdirectories under save_dir for different experiment runs.

Properties

- experiment: str - Returns the path to the specific experiment directory, combining save_dir with config_hash. This directory acts as the container for all data related to a particular experiment configuration.
- config: str - Returns the path to the configuration file within the experiment directory. This file stores the experiment's settings and parameters.
- dataset: str - Returns the path to the directory where dataset files are stored, allowing for separation between different types of data used or generated by the experiment.
- weights: str - Returns the path to the directory where model weights are saved, facilitating easy access to trained models.
- results: str - Returns the path to the directory where experiment results, such as metrics or output files, are stored.
- qa: str - Returns the path to the directory dedicated to quality assurance or testing results, ensuring that evaluation outputs are organized and retrievable.
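The property layout described above can be sketched as a small dataclass whose properties all derive from save_dir and config_hash. The class name DirectoryListSketch and every file or subdirectory name below ("config.yml", "dataset", "weights", "results", "qa") are assumptions chosen for illustration; the toolkit's actual names may differ.

```python
import os
from dataclasses import dataclass


@dataclass
class DirectoryListSketch:
    """Illustrative stand-in for DirectoryList; path names are hypothetical."""

    save_dir: str
    config_hash: str

    @property
    def experiment(self) -> str:
        # One directory per configuration hash under the shared save_dir.
        return os.path.join(self.save_dir, self.config_hash)

    @property
    def config(self) -> str:
        return os.path.join(self.experiment, "config.yml")  # assumed file name

    @property
    def dataset(self) -> str:
        return os.path.join(self.experiment, "dataset")  # assumed directory name

    @property
    def weights(self) -> str:
        return os.path.join(self.experiment, "weights")  # assumed directory name

    @property
    def results(self) -> str:
        return os.path.join(self.experiment, "results")  # assumed directory name

    @property
    def qa(self) -> str:
        return os.path.join(self.experiment, "qa")  # assumed directory name


paths = DirectoryListSketch(save_dir="./experiment", config_hash="abc123")
print(paths.experiment)
print(paths.weights)
```

Deriving every path from the configuration hash keeps the outputs of different configurations from overwriting each other under one save_dir.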

DirectoryHelper

class

src.utils.save_utils.DirectoryHelper

( config_path: str, config: Config )

The DirectoryHelper class provides helper methods for managing directories and saving configurations, facilitating the organization and preservation of experiment settings and outcomes.

Parameters

- config_path: str - The path to the configuration file.
- config: Config - The configuration object.

Attributes

- config_path: str - The path to the configuration file. This attribute stores the location of the main configuration file used by the experiment, enabling the DirectoryHelper to access and manage experiment configurations.
- config: Config - The configuration object. This attribute holds the actual configuration settings loaded from the configuration file, encapsulating all experiment parameters and settings in an accessible object format.
- sqids: Sqids - An instance of the Sqids class, which is used for managing unique config IDs to track experiments.
- save_paths: DirectoryList - Represents the structured list of directory paths associated with the current experiment.

save_config

( ) -> None

Saves the configuration to a file, ensuring that experiment parameters are documented and reproducible.

Ablation Utils

function

src.utils.ablation_utils.generate_permutations

( yaml_dict: dict, model: BaseModel )

Generates permutations of a YAML dictionary based on specified ablations. This function is pivotal for creating variations in configurations or datasets, enabling thorough testing and exploration of different scenarios.

Parameters

- yaml_dict: dict - The YAML dictionary containing the definitions for ablations.
- model: BaseModel - The pydantic BaseModel object, which might be used to reference or validate the permutations against specific criteria or configurations.

Returns

A list of permuted dictionaries, each representing a variation of the original YAML dictionary based on the defined ablations, facilitating extensive testing or data variation analysis.
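The general idea of expanding ablations into permutations is a Cartesian product over the alternative values. The sketch below is not the toolkit's generate_permutations: it takes the alternatives explicitly as a mapping from dotted key paths to value lists (a hypothetical interface), whereas the real function derives them from yaml_dict and the pydantic model.

```python
import copy
import itertools
from typing import Any, Dict, List


def set_by_path(config: dict, dotted_path: str, value: Any) -> None:
    """Assign value at a nested key such as 'lora.r'."""
    *parents, leaf = dotted_path.split(".")
    node = config
    for key in parents:
        node = node[key]
    node[leaf] = value


def expand_ablations(base: dict, ablations: Dict[str, List[Any]]) -> List[dict]:
    """Return one deep-copied config per combination of ablated values."""
    paths = list(ablations)
    permutations = []
    for combo in itertools.product(*(ablations[path] for path in paths)):
        variant = copy.deepcopy(base)  # never mutate the base config
        for path, value in zip(paths, combo):
            set_by_path(variant, path, value)
        permutations.append(variant)
    return permutations


base = {"lora": {"r": 8, "lora_dropout": 0.1}, "training": {"learning_rate": 2e-4}}
variants = expand_ablations(
    base,
    {"lora.r": [8, 16], "training.learning_rate": [1e-4, 2e-4, 5e-4]},
)
print(len(variants))
```

Two values for one key and three for another give 2 x 3 = 6 configurations, so the number of experiments grows multiplicatively with each ablated setting.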

Pydantic Models

Main Config

Config

class

src.pydantic_models.config_model.Config

( save_dir: Optional[str], ablation: AblationConfig, accelerate: Optional[bool], data: DataConfig, model: ModelConfig, lora: LoraConfig, training: TrainingConfig, inference: InferenceConfig )

Represents the overall configuration for the toolkit, including all necessary settings and parameters required for its operation. This configuration encapsulates everything from data handling and model specification to training and inference settings, as well as ablation studies and optimization flags.

Attributes

- save_dir: Directory for saving outputs.
- ablation: Configuration for ablation studies.
- accelerate: Enables multi-GPU training if set to True.
- data: Data ingestion configuration.
- model: Model configuration, including specifics for handling and optimization.
- lora: Configuration for LoRA (Low-Rank Adaptation) adjustments.
- training: Training configurations, including batch sizes, learning rates, and more.
- inference: Inference settings, such as token limits and sampling strategies.

Data Config

DataConfig

class

src.pydantic_models.config_model.DataConfig

( file_type: Literal['json', 'csv', 'huggingface'], path: Union[FilePath, HfModelPath], prompt: str, prompt_stub: str, train_size: Optional[Union[float, int]], test_size: Optional[Union[float, int]], train_test_split_seed: int )

Represents the configuration for data ingestion, specifying how data is loaded, prepared, and split for training and testing. This component is crucial for ensuring that the data feeding into the model is correctly formatted and segmented.

Attributes

- file_type: The format of the dataset file (JSON, CSV, or a HuggingFace dataset).
- path: Path to the dataset file or HuggingFace dataset.
- prompt: Template for generating model inputs.
- prompt_stub: Template fragment used during training.
- train_size: Specifies the size of the training dataset.
- test_size: Specifies the size of the test dataset.
- train_test_split_seed: Seed for reproducible train/test splits.

Model Config

ModelConfig

class

src.pydantic_models.config_model.ModelConfig

( hf_model_ckpt: Optional[str], device_map: Optional[str], quantize: Optional[bool], bitsandbytes: BitsAndBytesConfig )

Details the configuration for the model, including paths to pre-trained models, device mapping for training, and options for quantization to optimize model performance and resource usage.

Attributes

- hf_model_ckpt: Path or identifier for a HuggingFace model checkpoint.
- device_map: Specifies how the model should be distributed across available devices.
- quantize: Enables model quantization for performance optimization.
- bitsandbytes: Settings for BitsAndBytes quantization strategies.

BitsAndBytesConfig

class

src.pydantic_models.config_model.BitsAndBytesConfig

( load_in_8bit: Optional[bool], llm_int8_threshold: Optional[float], llm_int8_skip_modules: Optional[List[str]], llm_int8_enable_fp32_cpu_offload: Optional[bool], llm_int8_has_fp16_weight: Optional[bool], load_in_4bit: Optional[bool], bnb_4bit_compute_dtype: Optional[str], bnb_4bit_quant_type: Optional[str], bnb_4bit_use_double_quant: Optional[bool] )

Represents the configuration for BitsAndBytes quantization, offering detailed control over how models are quantized to improve performance and reduce memory footprint. These settings allow for advanced optimization techniques, including 8-bit and 4-bit quantization, with options for handling outliers and mixed precision.

Attributes

- load_in_8bit: Enable 8-bit quantization with specifics on handling outliers and module exceptions.
- llm_int8_threshold: Threshold for outlier detection in 8-bit quantization.
- llm_int8_skip_modules: Modules to exclude from 8-bit quantization.
- llm_int8_enable_fp32_cpu_offload: Offloads part of the model to CPU in fp32 to save memory.
- llm_int8_has_fp16_weight: Allows 16-bit weights in conjunction with 8-bit quantization.
- load_in_4bit: Enable 4-bit quantization for further size and speed optimization.
- bnb_4bit_compute_dtype: Defines the computational datatype in 4-bit quantization.
- bnb_4bit_quant_type: Specifies the quantization datatype in 4-bit layers.
- bnb_4bit_use_double_quant: Enables nested quantization for potentially higher efficiency.
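A rough sense of what load_in_8bit and load_in_4bit save can be had from simple arithmetic: weight memory is approximately the parameter count times the bits per parameter. The sketch below is a back-of-the-envelope estimate only; it ignores quantization constants, layers kept in higher precision, activations, and optimizer state, and the 7-billion-parameter figure is just an example.

```python
def weight_memory_gb(num_params: int, bits_per_param: int) -> float:
    """Approximate weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


num_params = 7_000_000_000  # example model size, not tied to any specific checkpoint
for label, bits in [("fp16", 16), ("8-bit", 8), ("4-bit", 4)]:
    print(label, weight_memory_gb(num_params, bits), "GB")
```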

LoRA Config

LoraConfig

class

src.pydantic_models.config_model.LoraConfig

( r: Optional[int], task_type: Optional[str], lora_alpha: Optional[int], bias: Optional[str], lora_dropout: Optional[float], target_modules: Optional[List[str]], fan_in_fan_out: Optional[bool], modules_to_save: Optional[List[str]], layers_to_transform: Optional[Union[List[int], int]], layers_pattern: Optional[str] )

Details the configuration for applying LoRA (Low-Rank Adaptation) to a model, enhancing its ability to adapt to new tasks without extensive retraining. LoRA settings determine how and where these adaptations are applied within the model architecture.

Attributes

- r: Rank for the LoRA adaptation, affecting the number of trainable parameters.
- task_type: Indicates the model's task type during training to guide LoRA adjustments.
- lora_alpha: Scaling factor for LoRA parameters.
- bias: Specifies how biases are handled in LoRA-adapted layers.
- lora_dropout: Dropout rate for LoRA layers, helping prevent overfitting.
- target_modules: Model components targeted for LoRA adaptation.
- fan_in_fan_out: Adjusts weight shape assumptions for compatibility with LoRA.
- modules_to_save: Explicitly marks non-LoRA modules for retention and training.
- layers_to_transform: Identifies specific layers for LoRA transformation.
- layers_pattern: Pattern matching for selecting layers for adaptation.
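How r and lora_alpha interact is easier to see with numbers. In the standard LoRA formulation, a weight matrix of shape d_out x d_in gets two low-rank factors with r * (d_in + d_out) trainable parameters in total, and the low-rank update is scaled by lora_alpha / r. The sketch below works this out for a 4096 x 4096 projection; the matrix size is an example and the helper names are illustrative.

```python
def lora_trainable_params(d_in: int, d_out: int, r: int) -> int:
    """Parameters in the two low-rank factors: A is r x d_in, B is d_out x r."""
    return r * (d_in + d_out)


def lora_scaling(lora_alpha: float, r: int) -> float:
    """Multiplier applied to the low-rank update in standard LoRA."""
    return lora_alpha / r


d_in = d_out = 4096
full_params = d_in * d_out
adapter_params = lora_trainable_params(d_in, d_out, r=8)
print(adapter_params, adapter_params / full_params)
print(lora_scaling(lora_alpha=16, r=8))
```

Doubling r doubles the adapter's parameter count, and keeping lora_alpha fixed while raising r lowers the scaling factor, which is why the two are usually tuned together.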

Training Config

TrainingConfig

class

src.pydantic_models.config_model.TrainingConfig

( training_args: TrainingArgs, sft_args: SftArgs )

Encapsulates the configuration for the training process, including both general training parameters and settings specific to Supervised Fine-Tuning (SFT). This dual configuration approach allows for fine-grained control over the training regimen.

Attributes

- training_args: Core training arguments, covering epochs, batch sizes, and optimization strategies.
- sft_args: Supervised Fine-Tuning arguments, providing additional options for fine-tuning performance.

TrainingArgs

class

src.pydantic_models.config_model.TrainingArgs

( num_train_epochs: Optional[int], per_device_train_batch_size: Optional[int], gradient_accumulation_steps: Optional[int], gradient_checkpointing: Optional[bool], optim: Optional[str], logging_steps: Optional[int], learning_rate: Optional[float], bf16: Optional[bool], tf32: Optional[bool], fp16: Optional[bool], max_grad_norm: Optional[float], warmup_ratio: Optional[float], lr_scheduler_type: Optional[str] )

Defines the core training parameters for the model, covering every aspect from epoch counts to specific hardware optimizations. These arguments provide a comprehensive toolkit for customizing the training process to suit different models, datasets, and hardware configurations.

Attributes

- num_train_epochs: Specifies the total number of epochs for training.
- per_device_train_batch_size: Sets the batch size for each training device.
- gradient_accumulation_steps: Determines the number of steps to accumulate gradients before updating model parameters.
- gradient_checkpointing: Enables memory-efficient gradient checkpointing.
- optim: Chooses the optimizer for training.
- logging_steps: Configures the frequency of logging for training metrics.
- learning_rate: Sets the initial learning rate.
- bf16: Activates BF16 training for compatible hardware.
- tf32: Enables TF32 precision on NVIDIA Ampere or newer GPUs.
- fp16: Engages FP16 precision for faster computation and reduced memory usage.
- max_grad_norm: Caps the norm of the gradients (gradient clipping) to prevent exploding gradients.
- warmup_ratio: Sets the fraction of the total training steps spent warming the learning rate up from zero to its initial value.
- lr_scheduler_type: Specifies the learning rate scheduler to be used.
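Several of these arguments combine arithmetically. The sketch below estimates the effective batch size, the number of optimizer steps, and the number of warmup steps from per_device_train_batch_size, gradient_accumulation_steps, num_train_epochs, and warmup_ratio. It is an approximation for planning purposes; the trainer's exact step count can differ slightly depending on how partial batches are handled, and the dataset size and device count are made-up inputs.

```python
import math


def effective_batch_size(per_device: int, grad_accum: int, num_devices: int = 1) -> int:
    """Examples consumed per optimizer update."""
    return per_device * grad_accum * num_devices


def optimizer_steps(num_examples: int, num_train_epochs: int, batch: int) -> int:
    """Approximate number of parameter updates over the whole run."""
    return math.ceil(num_examples / batch) * num_train_epochs


def warmup_steps(total_steps: int, warmup_ratio: float) -> int:
    """Steps spent ramping the learning rate up from zero."""
    return math.ceil(total_steps * warmup_ratio)


batch = effective_batch_size(per_device=4, grad_accum=4, num_devices=1)
total = optimizer_steps(num_examples=1000, num_train_epochs=3, batch=batch)
warmup = warmup_steps(total, warmup_ratio=0.03)
print(batch, total, warmup)
```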

SftArgs

class

src.pydantic_models.config_model.SftArgs

( max_seq_length: Optional[int], neftune_noise_alpha: Optional[float] )

Captures the specific configurations for Supervised Fine-Tuning (SFT), including parameters that affect how models process input sequences and apply NEFTune noise embeddings. These settings help tailor the fine-tuning process to the instructional nuances of the dataset.

Attributes

- max_seq_length: Limits the length of input sequences to the model.
- neftune_noise_alpha: Activates NEFTune noise embeddings, which can significantly enhance model performance for instruction-based fine-tuning by introducing a controlled amount of noise into the embeddings.
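The "controlled amount of noise" can be made concrete. In the NEFTune method, uniform noise in the range [-s, s] is added to the token embeddings during training, where s = neftune_noise_alpha / sqrt(sequence_length * hidden_dim). The pure-Python sketch below reproduces that scaling on a toy embedding matrix; it is an illustration of the formula, not the training library's implementation, and the function names are hypothetical.

```python
import math
import random
from typing import List


def neftune_noise_scale(alpha: float, seq_len: int, hidden_dim: int) -> float:
    """Half-width of the uniform noise range for one sequence."""
    return alpha / math.sqrt(seq_len * hidden_dim)


def add_neftune_noise(
    embeddings: List[List[float]], alpha: float, rng: random.Random
) -> List[List[float]]:
    """Return a noisy copy of a (seq_len x hidden_dim) embedding matrix."""
    scale = neftune_noise_scale(alpha, len(embeddings), len(embeddings[0]))
    return [[value + rng.uniform(-scale, scale) for value in row] for row in embeddings]


toy_embeddings = [[0.0] * 4 for _ in range(4)]  # 4 tokens, hidden size 4
scale = neftune_noise_scale(alpha=5.0, seq_len=4, hidden_dim=4)
noisy = add_neftune_noise(toy_embeddings, alpha=5.0, rng=random.Random(0))
print(scale)
```

Because the scale shrinks with the square root of the sequence length times the hidden size, the same alpha adds proportionally less noise per element to longer sequences and wider models.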

Inference Config

InferenceConfig

class

src.pydantic_models.config_model.InferenceConfig

( max_new_tokens: Optional[int], use_cache: Optional[bool], do_sample: Optional[bool], top_p: Optional[float], temperature: Optional[float], epsilon_cutoff: Optional[float], eta_cutoff: Optional[float], top_k: Optional[int] )

Defines the parameters governing the inference phase, focusing on output generation and sampling behavior. These settings allow users to balance between creativity, diversity, and fidelity to the input prompt.

Attributes

- max_new_tokens: Limits the number of new tokens generated.
- use_cache: Enables caching for efficiency during generation.
- do_sample: Activates stochastic sampling for output generation.
- top_p: Controls the nucleus sampling threshold.
- temperature: Rescales the next-token probability distribution; lower values sharpen it and higher values flatten it.
- epsilon_cutoff: Enables epsilon sampling, in which only tokens whose conditional probability exceeds this value are sampled.
- eta_cutoff: Enables eta sampling, a hybrid of locally typical sampling and epsilon sampling.
- top_k: Limits the sampling pool to the top-k most likely tokens.
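The effect of temperature, top_k, and top_p on a next-token distribution can be traced by hand. The sketch below is a simplified pure-Python illustration: it applies temperature to toy logits, keeps the top_k most likely tokens, then keeps the smallest prefix whose cumulative probability reaches top_p. The token names and logits are made up, and generation libraries differ in details such as tie handling and the order of filters.

```python
import math
from typing import Dict


def softmax_with_temperature(logits: Dict[str, float], temperature: float) -> Dict[str, float]:
    """Divide logits by the temperature, then apply a numerically stable softmax."""
    scaled = {tok: logit / temperature for tok, logit in logits.items()}
    peak = max(scaled.values())
    exps = {tok: math.exp(value - peak) for tok, value in scaled.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}


def filter_top_k_top_p(probs: Dict[str, float], top_k: int, top_p: float) -> Dict[str, float]:
    """Keep the top_k tokens, then the smallest nucleus reaching top_p; renormalize."""
    ranked = sorted(probs.items(), key=lambda item: item[1], reverse=True)[:top_k]
    top_k_total = sum(p for _, p in ranked)
    kept, cumulative = [], 0.0
    for tok, p in ranked:
        kept.append((tok, p))
        cumulative += p / top_k_total  # nucleus measured on the top-k distribution
        if cumulative >= top_p:
            break
    kept_total = sum(p for _, p in kept)
    return {tok: p / kept_total for tok, p in kept}


logits = {"a": 2.0, "b": 1.0, "c": 0.0, "d": -1.0}  # toy values
probs = softmax_with_temperature(logits, temperature=1.0)
kept = filter_top_k_top_p(probs, top_k=3, top_p=0.9)
print(sorted(kept))
```

With do_sample enabled, the next token would then be drawn from the filtered distribution; lowering the temperature concentrates more probability on the most likely token before any filtering happens.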

Ablation Config

AblationConfig

class

src.pydantic_models.config_model.AblationConfig

( use_ablate: Optional[bool], study_name: Optional[str] )

Specifies whether ablation studies are to be conducted and, if so, under what overarching study name. This configuration is crucial for systematically exploring the impact of various model components and settings on performance.

Attributes

- use_ablate: Enables the execution of ablation studies.
- study_name: Provides a label for grouping related ablation experiments.