How Vibe Coding is Changing the Economics of Software Development

(Note: This article was originally published in the June 2025 edition of Cyber Defense Magazine).

Introduction

Software development has changed dramatically in recent years. Developers have moved from copying code from Stack Overflow to using ChatGPT for code suggestions, using Integrated Development Environments (IDEs) with AI-powered autocompletion, to now prompting large language models (LLMs) to generate entire applications. This shift is transforming how engineering teams work and is reshaping the economics of software creation and cybersecurity.

In this article, I’ll explore how AI is shaping code editors, generators, and developer workflows. I’ll examine the landscape of AI coding tools, the forces driving their evolution, and the implications of these changes—from democratizing development to addressing cybersecurity risks. By understanding these trends, organizations can better prepare for the opportunities and challenges of this new era.

The New Era of AI-Driven Development

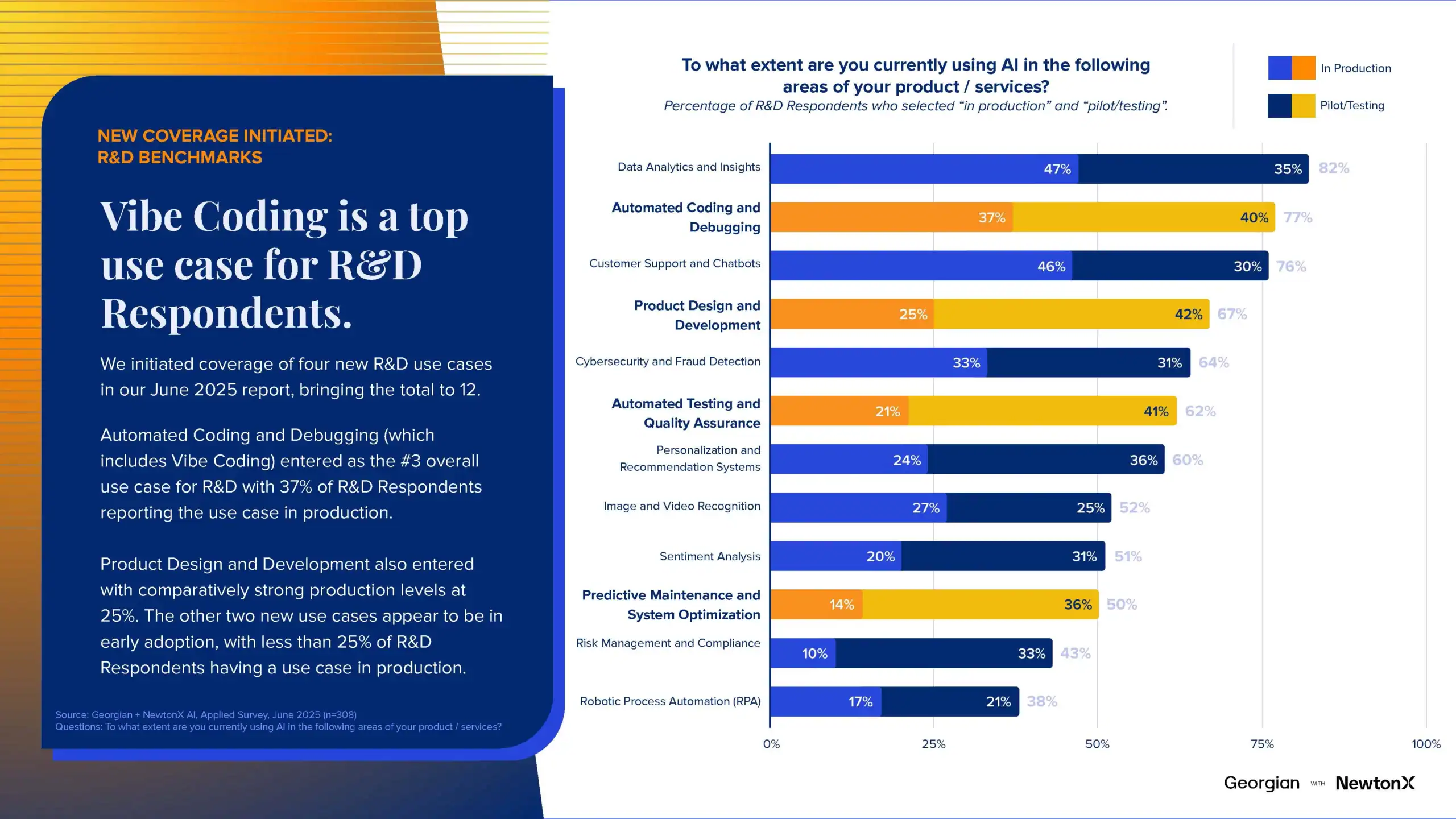

According to Georgian and NewtonX’s June 2025 “AI, Applied, Wave 2” Benchmarking report, Automated Coding and Debugging (or ‘vibe coding’) use cases are now a top use case amongst surveyed R&D teams. 77% of surveyed R&D leaders have indicated that they have an Automated Coding and Debugging use case either in production (37%) or in pilot/testing (40%).

As a result, more than 50% of R&D leaders surveyed report seeing increases in developer metrics like development velocity, code quality metrics and deployment frequency. However, surveyed R&D leaders were less likely to see positive results on metrics tied to product reliability, including Cycle Time, Lead Time, and Mean Time to Restore suggesting that increased automated coding may cause challenges in other areas of the development lifecycle.

Vibe coding enables developers to offload significant cognitive effort by allowing LLMs to take the lead in writing, debugging, and testing code. With vibe coding, users provide natural language prompts to describe their desired outcomes, and LLMs respond by generating code snippets, assisting with debugging, and validating functionality. This approach is making coding more intuitive, accessible, and efficient—even for individuals without traditional programming expertise.

AI coding tools are now leveraging advanced multi-step agentic architectures to manage entire development cycles. These tools can autonomously generate code, execute it in shells, verify its correctness, and request human validation only when necessary. Tasks that previously required weeks of manual effort are now completed in hours, constrained only by LLM token limits and GPU processing speeds.

For software engineers, this shift means that much of the traditional development workflow—reading API documentation, writing tests, code implementation, and verification—can be, and is being, automated. Rather than writing every line of code themselves, developers can now collaborate with AI tools to refine and optimize outputs.

Landscape of AI Coding Tools

Despite the explosive growth of AI-powered coding solutions, many tools converge around a core set of features, such as code completion and code understanding. While some tools aim for autonomous full-application generation, others remain IDE-first in their approach. At time of writing, product differentiation is partly being driven by tools designed for different developer audiences (front-end, back-end, etc), and different interfaces and form factors (stand-alone IDE, extension, CLI-tool, etc).

Open-source attempts at full automation have yet to reach high reliability (e.g., no major AI model has surpassed 50% auto-resolution on SWEbench’s Full Test). Nevertheless, ongoing advancements in foundation models and agentic architectures are driving optimism around greater adoption and improved reliability.

Two Forces Driving Innovation

Foundation Model Improvements

Progress in foundational models directly impacts the efficacy of AI coding tools. As models improve correctness, latency and cost, iteration speed for users materially improves. Competitors are racing to dominate public benchmarks like SWEbench, where correctness outweighs inference speed due to the high value of engineering tasks.

Agentic Architectures

Beyond coding, LLMs are being integrated into agentic systems. These architectures break down problems into manageable tasks, allowing LLMs to execute complex workflows through “tool calling,” memory management, and retrieval-augmented generation (RAG). By packaging multiple agents into cohesive systems, developers now have access to higher levels of abstraction, enabling tools that are more powerful and flexible than ever before. Many IDEs have even embraced model-context-protocol servers (you can think of them as mini-agent servers), which enable agents to call other agents – to achieve even more sophisticated workflows and tasks.

Implications for Software Businesses and Cybersecurity

While the rapid adoption of AI coding tools brings opportunities for software business, there are some barriers to adoption. Some opportunities and corresponding challenges for software businesses include:

Increased Code Production

The ability to generate correct code in hours rather than weeks has dramatically reduced the cost of production. However, this also means that the amount of code being generated is growing exponentially, increasing the attack surface for cyber threats. Traditional security programs and approaches still apply, but may require increased automation, discovery of applications, and a federated secure software supply-chain in order to be effective. The ideal ‘shifted-left’ state involves code-generation tools becoming security-aware and environment-aware so it does not produce offending insecure code and applications – and we’re still in the early stages of this.

Accessibility for Non-Engineers

Technology-adjacent professionals, such as project managers and product developers, can now produce code without formal engineering training. This democratization of code creation empowers a broader range of individuals to contribute to software development, fostering innovation and speeding up project timelines. However, it also introduces significant security risks, such as the potential generation of insecure code and vulnerabilities without the oversight of a skilled reviewer. Many organizations take the approach of hard isolation of applications from core systems and deploy ‘prototype’ or ‘marketing’ systems. But to enable both speed and security, this approach may be labour and cost intensive as it requires establishing the plumbing to spin up secure and ephemeral environments in a fully automated way.

Cybersecurity Economics

It would not be far-fetched to extrapolate that the cost to produce software is materially decreasing from a labor perspective, particularly if an engineer can produce something in days that used to take weeks or months. Unfortunately, the same math applies to bad actors using modern code generating tools to generate offensive code to exploit vulnerabilities. The decrease in cost and effort to generate code may result in an increase in the cost to defend systems. Startups, which often prioritize speed over security, may face increased risks as they scale. Large enterprises, which dominate cybersecurity spending today, may need to invest in solutions that account for the sheer volume of new code being produced.

The Path Forward

In my opinion, with the proliferation of AI-generated code, companies must prioritize scaling cybersecurity defenses to match the velocity of development. Open-source tools remain a vital equalizer for startups, but a broader shift is needed to improve the accessibility and affordability of security solutions for small and medium businesses. Without this, breaches—whether detected or not—are likely to escalate in frequency and impact.

Other key approaches that organizations can take to strengthen their cybersecurity practices in the age of vibe coding and agentic AI include:

- Maintaining tight access controls by ensuring that only authorized human and non-human entities are able to interact with AI models,

- Ensuring strong data governance practices by implementing strict rules around what data can be used and accessed by tools and AI agents, and

- Using monitoring and evaluation tools to monitor AI systems for anomalies like malicious prompts or unexpected model outputs.

Furthermore, organizations can leverage LLMs to effectively enforce their visions, policies, and standards. Utilizing LLMs to scan for adherence to architectural patterns, standards, and security policies is a logical evolution from the automated code scanning processes employed in relation to the code review of legacy vendor tools. Moreover, LLMs could potentially be expanded to enforce broader policy compliance across various organizational functions, thereby enhancing overall governance and risk management.

As we move into this new era of AI-driven development, a key challenge will be balancing the productivity gains of tools like autonomous agents with the growing need for robust, scalable defenses. While vibe coding may redefine how we build software, it also calls for a fundamental rethinking of how we protect it.

Read more like this

KumoVC: Turning Venture Data into Instant Predictions with KumoRFM

Originally published on Kumo.ai’s blog. In this technical blog post, Azin and…

Verticalized Voice AI – The Next Application Layer Shift

Every decade or so, a new interface reshapes not just how people…

From Static to Adaptive: Scaling AI Reasoning Without the Waste

2025 has been the year of reasoning models. OpenAI released o1 and...