Introducing Georgian’s “Crawl, Walk, Run” Framework for Adopting Generative AI

Since its founding in 2008, Georgian has conducted diligence on hundreds of technology companies, all at varying stages of their artificial intelligence (AI) journeys. We’ve also worked hands-on with the 45+ companies in our portfolio on their own AI journeys. To support our portfolio management activities, our research and development team has run bootcamps and hackathons, consulted on new product capabilities and open-source software libraries and conducted numerous applied research projects in partnership with our portfolio.

Despite this experience, the rapid transformation that generative AI (GenAI), and Large Language Models (LLMs) in particular, has spurred in the tech industry over the last 12 to 18 months is unlike anything we have seen in the past 15 years.

Because GenAI is developing so quickly, for many firms, integrating AI into a software product can be challenging. With that in mind and based on our work with our portfolio companies, Georgian created a Crawl, Walk, Run framework to help provide guidance to companies from the initial stages of AI integration to more advanced levels of technical sophistication. The primary audience for this framework is product and engineering teams, along with CEOs of growth-stage startups adopting GenAI.

Our goal with this framework is to make it easier for companies to understand which technologies are appropriate for their stage of AI maturity and to help align executives and the development teams putting these technologies into practice.

As GenAI evolves, we’ll continue to validate our approach through bootcamps, hackathons and advisory sessions to ensure our framework remains up-to-date.

About Georgian’s hackathons and bootcamps

Hackathons and bootcamps are among the services we offer to companies we invest in. Our R&D team runs focused engagements with the goal of helping firms condense the timeline from ideation to implementation for their GenAI journey.

Hackathons: Two- to 10-day intensive engagements where our R&D team works with portfolio companies to solve specific product challenges.

Bootcamps: Hands-on tutorials on recent GenAI developments, followed by a hackathon where our portfolio companies build proofs-of-concept with support from our R&D team.

In this post, we’ll explore how to move through the stages of Crawl, Walk, Run, including:

- Techniques that technical teams may consider

- Business considerations for CEOs at each stage

- The potential business value of these techniques

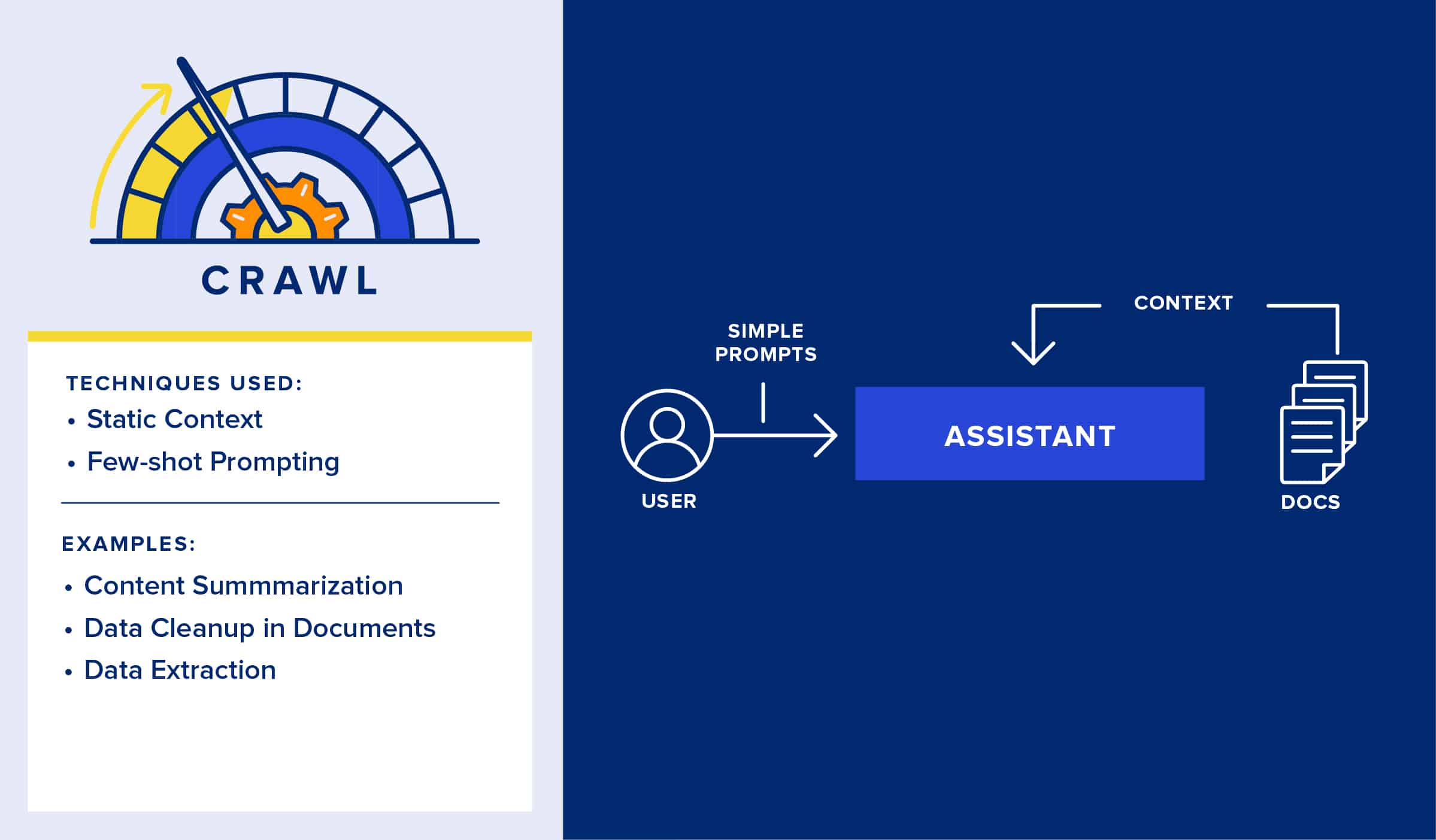

Crawl: Laying a Solid Foundation

The Crawl stage consists of becoming familiar with GenAI techniques and learning how they can be applied.

By understanding what GenAI is capable of, companies can define and focus on specific use cases that drive the business forward rather than tackling several complicated problems at once. Early experimentation builds GenAI knowledge within the team, which helps make the company’s GenAI adoption sustainable.

In our experience, firms can successfully move past the Crawl stage by focusing on an achievable but valuable use case. The use case should be simple enough to deliver in a short period and iterate.

Techniques for Your Development Team

Developers don’t have to use complex data science techniques to get started with GenAI. In the Crawl stage, we think companies should build familiarity with GenAI and test out use cases using basic prompting.

At this stage, companies can seek opportunities where LLMs can perform tasks like content summarization using straightforward prompts and fixed data.

In terms of developer tooling, teams can start with simple experiments in Jupyter notebooks. Many developers will experiment with GenAI directly with popular LLM APIs like those from OpenAI or Anthropic. However, using vendor-specific APIs may limit a team’s flexibility and access to the latest advancements.

We believe there are benefits to using, from the start, programming frameworks such as LangChain, LlamaIndex or Griptape that ‘wrap’ access to a range of LLMs. These frameworks can, in our experience, make it easier to switch between different LLMs and connect to third-party applications, such as existing B2B software applications. Using an LLM framework can also simplify using more advanced techniques such as prompt chaining, Retrieval Augmented Generation (RAG) and software agents; these are concepts that we will discuss in the Walk and Run sections.

Retrieval Augmented Generation. Broadly speaking, RAG is a pattern of working with LLMs where a search or retrieval step is first made to locate relevant information, then that specific ‘context’ is passed to the LLM to generate a response.

For example, a query against a corporate database that contains customer records might pull a specific customer’s information and pass that to the LLM as context for a chat interaction with that customer.
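The retrieve-then-generate pattern described above can be sketched in a few lines. This is a minimal illustration rather than a reference implementation: the `CUSTOMERS` records, the function names and the `llm` callable are all hypothetical stand-ins, and a real system would query an actual database and call an actual model.

```python
from typing import Callable

# Hypothetical customer records standing in for a corporate database.
CUSTOMERS = {
    "c-1001": {"name": "Ada Example", "plan": "Pro", "open_tickets": 2},
    "c-1002": {"name": "Bo Sample", "plan": "Free", "open_tickets": 0},
}


def retrieve_customer(customer_id: str) -> dict:
    """Retrieval step: look up only the record needed for this conversation."""
    return CUSTOMERS.get(customer_id, {})


def build_rag_prompt(customer_id: str, question: str) -> str:
    """Place the retrieved record into the prompt as context for the LLM."""
    record = retrieve_customer(customer_id)
    context = "\n".join(f"{key}: {value}" for key, value in record.items())
    return (
        "Answer using only the customer context below.\n"
        f"Context:\n{context or '(no record found)'}\n\n"
        f"Question: {question}"
    )


def answer(customer_id: str, question: str, llm: Callable[[str], str]) -> str:
    """Generation step: `llm` is any function that maps a prompt to text."""
    return llm(build_rag_prompt(customer_id, question))
```

Only the one matching record reaches the prompt, which is the point of the retrieval step: the model sees the context relevant to this interaction and nothing else.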

For those who are not developers but want to be part of the experimentation process, tools such as OpenAI’s Playground — and for business users, ChatGPT itself — can be useful for interactive prototyping. These applications can provide quick feedback that helps users understand how to refine AI outputs during the early stages of development.

In terms of prompting techniques, at the Crawl stage, building a minimum viable product by using few-shot learning and basic prompt engineering to get more relevant LLM responses is typically a good starting point. The prompts should use static information that is unchanged across sessions or users, like inputting a detailed FAQ set into the LLM. For handling multiple inputs within a mostly static dataset, a straightforward solution like Meta’s FAISS can enable searching through a large set of documents efficiently.
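As a rough sketch of few-shot prompting over static information, the snippet below assembles a prompt from a fixed FAQ set. The FAQ entries and the function name are invented for illustration; the resulting string would be sent to whichever LLM API or framework the team is using.

```python
# Hypothetical static FAQ entries; the same text is reused for every user and session.
FAQ = [
    ("How do I reset my password?", "Use the 'Forgot password' link on the sign-in page."),
    ("Can I export my data?", "Yes. Go to Settings, then Export, and choose CSV."),
]


def build_few_shot_prompt(question: str) -> str:
    """Show the model worked examples, then leave the final answer open."""
    lines = ["You are a support assistant. Answer in the style of the examples."]
    for q, a in FAQ:
        lines.append(f"Q: {q}\nA: {a}")
    lines.append(f"Q: {question}\nA:")
    return "\n\n".join(lines)
```

Because the examples never change, this kind of prompt is easy to version, review and test before any dynamic data is introduced.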

In our view, it is beneficial to use the most capable LLM (as defined by LLM leaderboards such as Hugging Face’s Open LLM and Chatbot Arena Leaderboards) available to your team during the experimentation phase.

Once a team is confident in its ability to implement the desired use case with the more capable model, the team can test cheaper commercial models (e.g. models from Anthropic or OpenAI’s GPT-3.5) or self-hosted open-source models (e.g. Llama 2 or Mistral) to see if cost can be reduced while still meeting quality and performance needs.

Even at this early stage, companies should align LLMs with business goals and establish basic safeguards. For example, we believe that developers building chatbot applications should guide LLMs to avoid offensive or irrelevant content through prompt instructions.

Considerations for CEOs

We think leadership can help teams stay focused by identifying opportunities where AI may be able to drive immediate efficiencies and cost savings. In our view, tasks that are simple but performed regularly make strong candidates for automation.

Leaders can help boost their company’s GenAI trajectory by equipping technical teams with tools, and the mandate, to explore different approaches to using LLMs.

To try and ensure experimentation turns into actual use cases, CEOs can structure GenAI projects so there’s a focus on end-to-end delivery of an internal or external product into production. For example, several firms we have worked with have delivered ‘Question and Answer’ chatbots that help employees better support customers or the wider business.

The opportunity at this early Crawl stage is getting familiar with the technologies and changes required to get GenAI ideas into production. From our perspective, there’s a significant distinction between the simplicity of developing GenAI prototypes and the complexity of advancing these prototypes to production-ready GenAI features.

Many of the challenges of getting GenAI solutions to market relate to the fact that LLM responses are non-deterministic. This non-deterministic nature means that asking the same question three times can get three different answers.

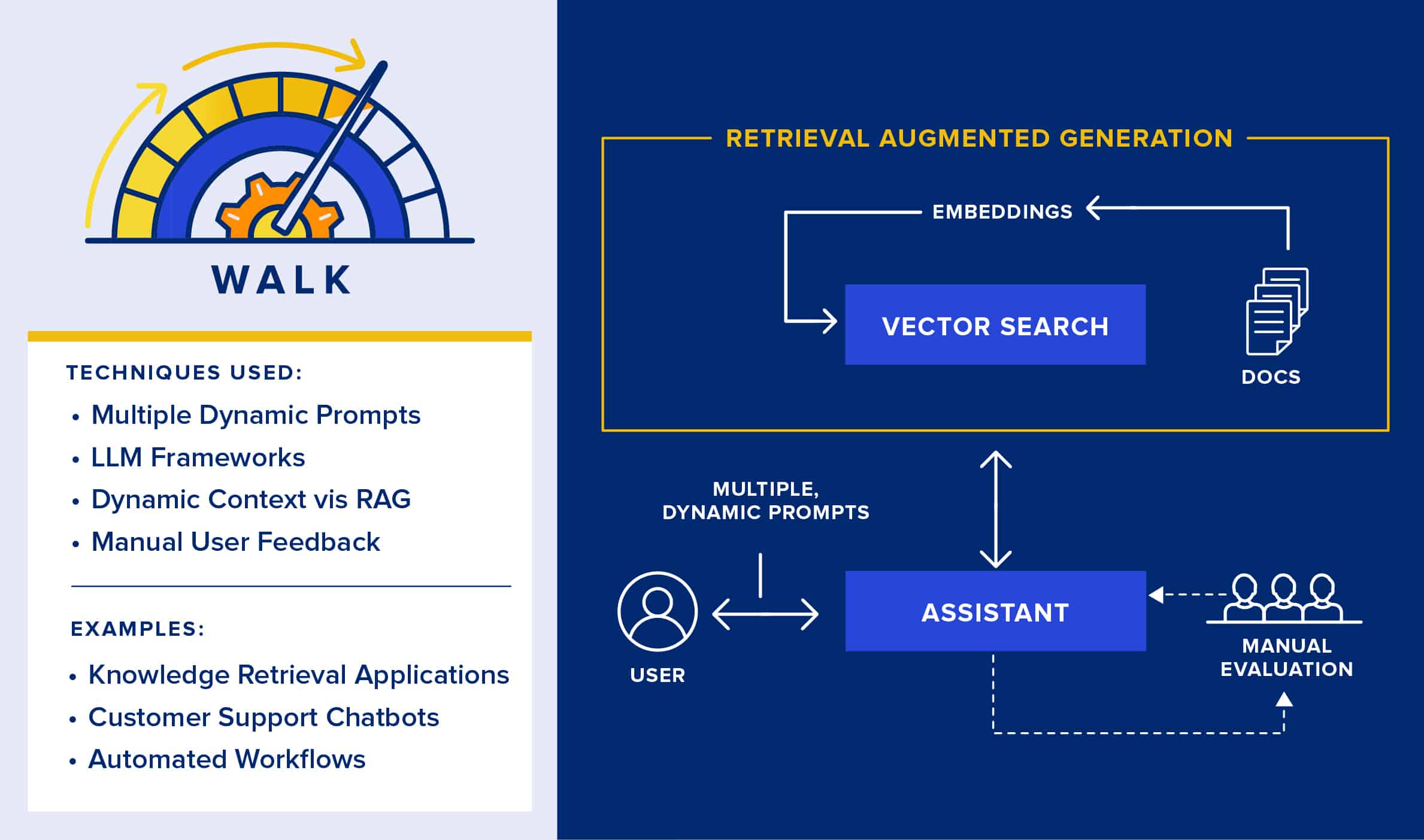

Walk: Striding Forward

At the Walk stage, companies move on to more advanced techniques such as integrating knowledge from other data sources via RAG, adding guardrails to keep an LLM ‘on topic’ and using more sophisticated prompting techniques such as prompt chaining.

Techniques for Developers

Teams operating at the Walk stage of GenAI maturity tackle more complicated use cases that may require multiple prompts while incorporating human feedback and changing context. Examples we’ve observed within our portfolio include implementing knowledge retrieval systems, such as fetching information from internal databases, and customer support chatbots.

Development teams at the Walk stage tend to use more sophisticated prompting techniques like prompt chaining, where a sequence of prompts is sent to the LLM to solve a task. Supporting this type of multi-step prompting is one of the key benefits of using an LLM application framework such as LangChain, LlamaIndex or Griptape. These frameworks aim to simplify prompt chaining so that teams can create more sophisticated workflows than they could by communicating directly with an LLM.
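To make the idea of prompt chaining concrete, here is a minimal framework-free sketch. It assumes the LLM is available as a plain function from prompt text to response text; `run_chain` and `SUPPORT_CHAIN` are hypothetical names, and frameworks such as LangChain offer their own, richer abstractions for the same idea.

```python
from typing import Callable, List

LLM = Callable[[str], str]


def run_chain(templates: List[str], initial_input: str, llm: LLM) -> str:
    """Send prompts in order, feeding each response into the next template."""
    text = initial_input
    for template in templates:
        text = llm(template.format(input=text))
    return text


# Hypothetical two-step chain: summarize, then draft a reply from the summary.
SUPPORT_CHAIN = [
    "Summarize this customer message in one sentence:\n{input}",
    "Write a polite reply that addresses this summary:\n{input}",
]
```

Calling `run_chain(SUPPORT_CHAIN, message, llm)` would issue two LLM calls, with the second prompt built from the first response. Splitting a task this way lets each prompt stay short and be tested in isolation.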

Development teams may also experiment with A/B testing to evaluate the results of different prompts. Beyond iterative prompt experimentation, teams can strengthen their development workflow with dedicated tooling. For example, we have helped Walk-stage portfolio companies use emerging debugging tools, such as LangSmith, that aim to simplify the task of developing and debugging LLM applications as a team.
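One possible shape for A/B testing prompts is sketched below: users are bucketed deterministically into a prompt variant, and some binary quality signal is tallied per variant. The variant texts, the hashing scheme and the `FeedbackTally` class are illustrative assumptions, not a description of any particular tool.

```python
import hashlib
from collections import defaultdict

# Hypothetical prompt variants under test.
VARIANTS = {
    "A": "Summarize the document in three bullet points:\n{doc}",
    "B": "Summarize the document in one short paragraph:\n{doc}",
}


def assign_variant(user_id: str) -> str:
    """Deterministically bucket a user so they always see the same variant."""
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    names = sorted(VARIANTS)
    return names[digest[0] % len(names)]


class FeedbackTally:
    """Counts positive and total ratings per variant."""

    def __init__(self) -> None:
        self.counts = defaultdict(lambda: [0, 0])  # variant -> [positive, total]

    def record(self, variant: str, positive: bool) -> None:
        self.counts[variant][0] += int(positive)
        self.counts[variant][1] += 1

    def positive_rate(self, variant: str) -> float:
        positive, total = self.counts[variant]
        return positive / total if total else 0.0
```

Comparing `positive_rate("A")` with `positive_rate("B")` gives a first signal, though with small samples the difference may not be meaningful, so teams would likely want a significance check before switching prompts.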

To move applications beyond basic functionality and get higher quality outputs, we believe that inputs must incorporate organization or industry-specific data. We have helped a number of our portfolio companies incorporate that type of knowledge into LLM applications using various approaches to RAG.

By using RAG, outputs from a company’s LLM application can include specific information and knowledge from organizations, increasing the relevancy of the outputs. At the Walk stage, in our view, companies should consider starting their RAG journey using a vector database to index unstructured text data.

From our perspective, teams should focus initially on incorporating non-sensitive data into vector databases. This focus on non-sensitive data is because, at least for the time being, vector databases are unlikely to maintain the same level of information and privacy controls found in existing information systems such as relational databases. In other words, once the information is loaded into the vector index, it becomes available to anyone using the new LLM application.

Once a vector index is available, LLM applications can use powerful similarity searches to find relevant information within the data to pass to the LLM. For example, a user might load a company’s detailed product information into a vector database and then allow a chatbot application to search for relevant answers using a vector search, passing relevant results back to the LLM to generate an answer to the customer’s question using the most contextually relevant parts of the product documentation.
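The similarity search step can be illustrated with a toy index. This sketch deliberately replaces a real embedding model and vector database with a bag-of-words vector and an in-memory list, so the names `embed`, `cosine` and `ToyVectorIndex` are hypothetical; only the shape of the workflow (embed, score, take the top results) carries over to production tools.

```python
import math
import re
from collections import Counter
from typing import List, Tuple


def embed(text: str) -> Counter:
    """Toy 'embedding': a bag-of-words count vector (stand-in for a real model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[token] * b[token] for token in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class ToyVectorIndex:
    """Holds documents and their vectors; search returns the k most similar."""

    def __init__(self, docs: List[str]) -> None:
        self.docs = docs
        self.vectors = [embed(d) for d in docs]

    def search(self, query: str, k: int = 2) -> List[Tuple[float, str]]:
        q = embed(query)
        scored = [(cosine(q, v), d) for v, d in zip(self.vectors, self.docs)]
        return sorted(scored, key=lambda pair: pair[0], reverse=True)[:k]
```

The top-scoring passages returned by `search` are what would be pasted into the prompt as context before asking the LLM to answer the customer's question.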

We believe that the Walk stage is also a good time to incorporate continuous monitoring and feedback from the user, such as providing the means to give basic “in-line” feedback via a thumbs up (or down). For example, this could be done within a chatbot after each response, or it could be done at the end of a task such as summarization.

Considerations for CEOs

Organizations at this stage may consider tackling sophisticated projects that leverage AI’s capability for simplifying/automating multi-step processes. Examples may include optimizing internal workflows and operational processes or providing deeper insights into market trends and consumer behavior through language analysis.

At the Walk stage, teams are likely pulling in organizational knowledge via RAG, enabling the language model to answer questions with specific context about your products, organization or customers. This approach helps make responses more relevant to a business and to specific customer or organizational data, increasing the utility of GenAI applications.

However, as teams use RAG to add that context, it’s important, in our view, to consider implications on information security and privacy. In our experience, vector databases are not set up “out of the box” to maintain the same information controls as many of the databases and other systems from which the data would be sourced. Teams should therefore be wary of bulk-indexing organizational information in a vector index. A potential solution, discussed in the next section, is to pull sensitive data directly from existing information systems in the retrieval step, rather than relying on loading all information into a new vector store.

Teams at this stage may debate whether they should be using a development framework such as LangChain. We see the complexity and stability of these open-source frameworks, which are evolving quickly, as concerns. However, in our experience, using an LLM framework helps teams move faster and reduces complexity in the long run compared to teams having to create their own equivalent functionality that includes integrations with third-party software, document handlers, prompt workflow management, chatbot memory and agent functionality.

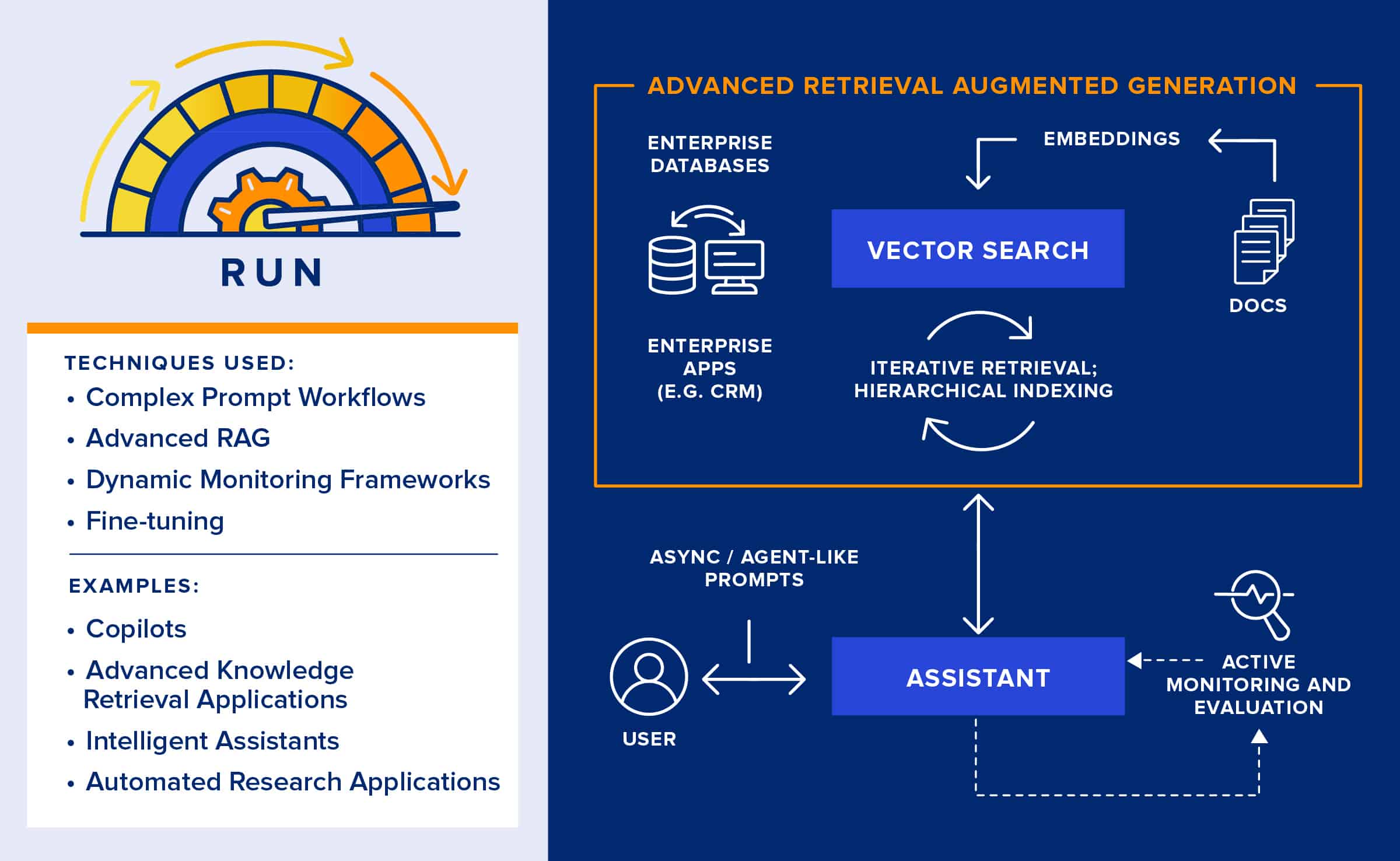

Run: Hitting Full Pace

At the Run stage, we observe teams starting to use advanced techniques such as providing dynamic context to the LLM application, more advanced forms of RAG and longer-running, agent-style asynchronous processes.

In addition to RAG, companies may also explore fine-tuning models to incorporate company or domain-specific knowledge into the language model itself.

Techniques for Developers

At this stage, development teams may incorporate longer-running, asynchronous LLM processes as part of their applications. LLM application frameworks, such as LangChain, provide some type of agent capability. Agents use an LLM to reason about tasks, execute those tasks and make decisions without necessarily having direct human oversight, enabling more complicated use cases.
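The reason-then-act loop behind an agent can be sketched as follows. This is a simplified illustration under stated assumptions: the `CALL`/`FINAL` reply format is invented for this example, `llm` is any function from prompt text to response text, and real frameworks handle parsing, memory and errors far more robustly.

```python
from typing import Callable, Dict, List

Tool = Callable[[str], str]


def run_agent(task: str, llm: Callable[[str], str], tools: Dict[str, Tool],
              max_steps: int = 5) -> str:
    """Loop: ask the LLM for the next action, run the tool, feed back the result.

    Assumed (hypothetical) reply format from the LLM:
      "CALL <tool_name> <argument>"  or  "FINAL <answer>"
    """
    history: List[str] = [f"Task: {task}"]
    for _ in range(max_steps):
        reply = llm("\n".join(history)).strip()
        if reply.startswith("FINAL"):
            return reply[len("FINAL"):].strip()
        parts = reply.split(maxsplit=2)
        if len(parts) >= 2 and parts[0] == "CALL" and parts[1] in tools:
            argument = parts[2] if len(parts) == 3 else ""
            observation = tools[parts[1]](argument)
        else:
            observation = "error: unrecognized action"
        history.append(f"Action: {reply}")
        history.append(f"Observation: {observation}")
    # The step limit is a simple safeguard against an agent that never finishes.
    return "stopped: step limit reached"
```

The `max_steps` cap reflects the caution discussed in this section: because the LLM decides what to do next, the surrounding code should bound how long it is allowed to keep going.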

Additionally, agent frameworks such as Microsoft’s AutoGen and Semantic Kernel provide more experimental agent capabilities, such as multi-agent interactions. Example applications of this technology include doing open-ended web research on behalf of a user or proactively managing tasks in a project management system. In our view, for now, teams should be curious but cautious when it comes to LLM agents, because the unpredictable nature of LLM reasoning can generate unexpected results.

Based on our experience to date, we believe that teams should actively monitor LLM responses for relevance and tone. This monitoring may be particularly important where long-running, autonomous agents are reasoning and prioritizing tasks. Monitoring can help keep agent behavior within predefined boundaries, for example by preventing a web research agent from excessively scraping irrelevant websites during research tasks.
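One simple way to keep a web research agent within predefined boundaries is to check every fetch against an allowlist and a budget. The sketch below is illustrative only: `FetchGuard`, its parameters and the choice of a domain allowlist are assumptions for this example rather than a recommended policy.

```python
from urllib.parse import urlparse


class FetchGuard:
    """Checks each URL an agent wants to fetch against an allowlist and a budget."""

    def __init__(self, allowed_domains: set, max_fetches: int) -> None:
        self.allowed_domains = allowed_domains
        self.max_fetches = max_fetches
        self.fetches = 0
        self.blocked = []  # kept so the team can review what was refused

    def allow(self, url: str) -> bool:
        host = urlparse(url).hostname or ""
        # Match the domain itself or any subdomain, but not look-alike hosts.
        on_list = any(host == d or host.endswith("." + d) for d in self.allowed_domains)
        if on_list and self.fetches < self.max_fetches:
            self.fetches += 1
            return True
        self.blocked.append(url)
        return False
```

An agent's fetch tool would call `allow(url)` before each request, and the `blocked` list gives the team a record to review when tuning the boundaries.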

At this level of maturity, how teams implement RAG may become more sophisticated in several ways. For example, teams might use hierarchical indexes where summaries of documents are made searchable in addition to the full documents. Developers might also use recursive or iterative RAG where related terms or concepts are automatically searched for as part of the retrieval process, in addition to the original retrieval query.
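A hierarchical index can be sketched as a two-stage search: first over document summaries, then over the chunks of the best-matching document. The word-overlap score below is a toy stand-in for real vector similarity, and the function names and data layout are hypothetical.

```python
import re
from typing import Dict, List


def _words(text: str) -> set:
    """Lowercased set of alphanumeric tokens in the text."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def overlap(query: str, text: str) -> int:
    """Toy relevance score: number of distinct words shared with the query."""
    return len(_words(query) & _words(text))


def hierarchical_search(query: str, summaries: Dict[str, str],
                        chunks: Dict[str, List[str]]) -> str:
    """Stage 1: pick the document whose summary matches best.
    Stage 2: pick the best chunk inside that document only."""
    best_doc = max(summaries, key=lambda doc_id: overlap(query, summaries[doc_id]))
    return max(chunks[best_doc], key=lambda chunk: overlap(query, chunk))
```

Searching summaries first narrows the candidate set cheaply, so the detailed chunk search only runs inside documents that are likely to be relevant.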

In addition, teams may expand beyond pure vector use cases and integrate data in enterprise sources such as data stored in SQL databases or other enterprise information systems. For example, retrieving context directly from a Customer Relationship Management (CRM) system may help maintain information privacy and security policies compared with bulk-loading information into a vector index.
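To illustrate retrieving context directly from a system of record while respecting access rules, the sketch below uses an in-memory SQLite database as a stand-in for a CRM. The table, columns and ownership rule are hypothetical; a real CRM would enforce permissions through its own API or database roles.

```python
import sqlite3


def make_demo_crm() -> sqlite3.Connection:
    """In-memory stand-in for a CRM; table and column names are hypothetical."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE accounts (id TEXT, owner TEXT, name TEXT, renewal_date TEXT)"
    )
    conn.executemany(
        "INSERT INTO accounts VALUES (?, ?, ?, ?)",
        [("a1", "alice", "Acme Corp", "2024-06-30"),
         ("a2", "bob", "Globex", "2024-09-15")],
    )
    return conn


def retrieve_account_context(conn: sqlite3.Connection, user: str, account_id: str) -> str:
    """Return context only for accounts the requesting user owns."""
    row = conn.execute(
        "SELECT name, renewal_date FROM accounts WHERE id = ? AND owner = ?",
        (account_id, user),
    ).fetchone()
    if row is None:
        return "No accessible record."
    return f"Account: {row[0]}; renewal date: {row[1]}"
```

Because the permission check happens in the query itself, a user who does not own an account never has its data placed into the LLM prompt, which is the property that bulk-loading into a shared vector index can lose.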

Teams may also consider fine-tuning a foundational language model, like Meta’s Llama 2 model, with specific knowledge using techniques like LoRA. For example, teams could train Llama 2 to generate code in a preferred corporate style. Once again, we think it is important to consider privacy and security of the training data, as once trained, the model’s knowledge could become accessible to a broader audience than intended (that is, anyone using the LLM). More information on fine-tuning models can be found in Georgian’s series on the topic.

Reinforcement Learning from Human Feedback (RLHF) may also be applicable to help improve model performance by reducing erroneous answers, bias and hallucinations. In Reinforcement Learning (RL), a model ‘learns’ through interactions with its environment (e.g. the user), gaining ‘rewards’ which act as feedback signals to the RL agent. Human Feedback (HF) can be used to inform the reward model, with human annotators ranking or scoring model output. This interaction could look like upvote or downvote buttons on a model interface.

However, RLHF is not a perfect solution, as collecting human feedback in a clean and standardized way can be difficult and time-consuming. Learning from user feedback also carries a risk of model drift when that feedback is adversarial, as seen in 2016 when Twitter users corrupted Microsoft’s Tay chatbot in less than 24 hours.

Considerations for CEOs

LLM-powered software agents offer a potentially transformative approach to building applications; however, their relative lack of maturity and non-deterministic nature might pose too much risk for certain use cases and industries. We believe that, at this time, teams can explore the use of intelligent agents with an experimental mindset while being cautious about widespread use in production settings.

Why LLM agents differ from traditional software

We believe that one of the important differences between LLM-enabled software agents (and any other software that uses an LLM) and more traditional types of software is that the behavior of the system is probabilistic. That probabilistic nature means that the output is subject to some chance and variation, rather than being consistent because it’s based on fixed rules or logic. For example, an agent, given the same task five times, might solve the task in five different ways. While each solution is likely to be similar, there is a high likelihood that there will be some differences.

Executives should ensure that information assets, when used in model fine-tuning and RAG, comply with existing security and privacy policies, laws and standards, and should prioritize protecting customer data and intellectual property in their LLM applications. Users should not be able to access sensitive information via an AI application, so teams should avoid simply loading information into vector databases for LLM users.

To ensure development teams consider laws, regulations, business policies and objectives, we believe that executives should implement strong oversight mechanisms (e.g. security reviews and adversarial testing) for GenAI projects and push teams to proactively introduce ‘AI guardrails’ into their product development thinking.

How Crawl, Walk, Run may change in 2024

The GenAI landscape is evolving rapidly, offering opportunities and challenges for development teams and technology CEOs leading organizations adopting these technologies. As GenAI matures, we expect our Crawl, Walk, Run framework will continue to evolve through 2024. Already we are seeing some of our portfolio companies use more advanced techniques earlier in their GenAI journeys than we had originally anticipated. We plan on continuing to update our framework.

With that in mind, here are a few areas of GenAI development we will be tracking in 2024.

Open-source LLMs. Throughout 2023, there were a number of significant open-source model releases including Falcon, Llama 2 and Mistral. We look forward to seeing if the pace of open-source model releases will continue and are monitoring any developments in 2024. We are aiming to make it easier for teams to use and evaluate the performance of open-source models via our LLM Fine Tuning Hub project on GitHub.

LLM development tooling. As LLM apps continue to move from experimentation to production, in our view, developer tooling will continue to evolve. For example, our R&D team has been evaluating a number of different tools for debugging, testing and evaluating LLMs as part of the development process, including LangSmith from LangChain.

Multi-agent applications. Georgian is actively experimenting with various frameworks, including LangGraph and Microsoft’s AutoGen, that provide multi-agent capabilities. These newer frameworks enable developers to create multiple agents, each potentially utilizing a different LLM and different tools, which are then able to ‘collaborate’ to solve a particular problem. While these frameworks are experimental, we are watching this space closely and will continue to update our Crawl/Walk/Run framework as these technologies mature.

LLM application guardrails. As more LLM-enabled applications make their way toward production, we are actively tracking technologies that can be used to help keep these applications on the right path. Such tools, often called ‘guardrails’, are being integrated into programming frameworks such as LangChain. We expect the capabilities to continue to develop as more organizational and legislative requirements are placed on AI-enabled applications.

As GenAI evolves, Georgian will update our thinking within the Crawl, Walk, Run framework. Our goal is to provide product and company leadership with a guide for navigating the GenAI landscape.
