Seven Principles of Building Fair Machine Learning Systems

I recently gave a lecture for the Bias in AI course that the Vector Institute launched for small-to-medium-sized companies. In it, I introduced seven principles for building fair machine learning (ML) systems: a framework organizations can use to address bias in artificial intelligence (AI) systematically and sustainably, going beyond a general desire to deploy AI technologies ethically.

We’ve all heard examples of unfair AI: job ads targeted at people similar to current employees that send only young men to recruiters’ inboxes, or cancer detection systems that don’t work as well on darker skin. When building these ML models, we need to do better at removing bias, not only for compliance and ethical reasons but also because fair systems earn trust, and trusted companies perform better.

All companies have an opportunity to differentiate themselves on trust. The most trusted companies will become leaders in their market as customers stay loyal for longer and are more willing to share data, creating more business value and attracting new customers. Even at startups, where limited resources are spread thin, it’s still essential to establish trust now. That’s why trust is one of our key investment thesis areas. Our trust framework has six pillars: fairness, transparency, privacy, security, reliability and accountability. I’m excited to share more about how we help our portfolio companies create fairer AI solutions.

We care about fairness because we know machine learning models are not perfect. They make errors, just like us. In addition to inheriting bias from historical human behavioral data and from inequalities in society, we map probabilistic model outputs to deterministic answers (for example, by applying a threshold to a score), which can introduce further errors. When we talk about fairness and bias, we’re concerned about these errors affecting some subgroups more than others.

Case Study

Let’s look at a case study. Many schools use Turnitin to detect plagiarism. Recently, market research identified an emerging type of cheating: students paying others to write their essays. To detect this contract cheating, Turnitin built a model using advanced machine learning, natural language processing (NLP) techniques and image analysis. The model considers differences in writing style between a student’s previously submitted essays and the essay in question and predicts whether a student has cheated or not. Turnitin doesn’t use any demographic or student performance data to train the ML model. However, there were still reasons for us to be concerned about possible bias in the final predictions.

We’ll explore this contract cheating case study alongside our seven principles for building fair ML systems to bring them to life. We hope the principles, questions to ask and checklists we’ve included below will help guide you as you build your own fair AI systems.

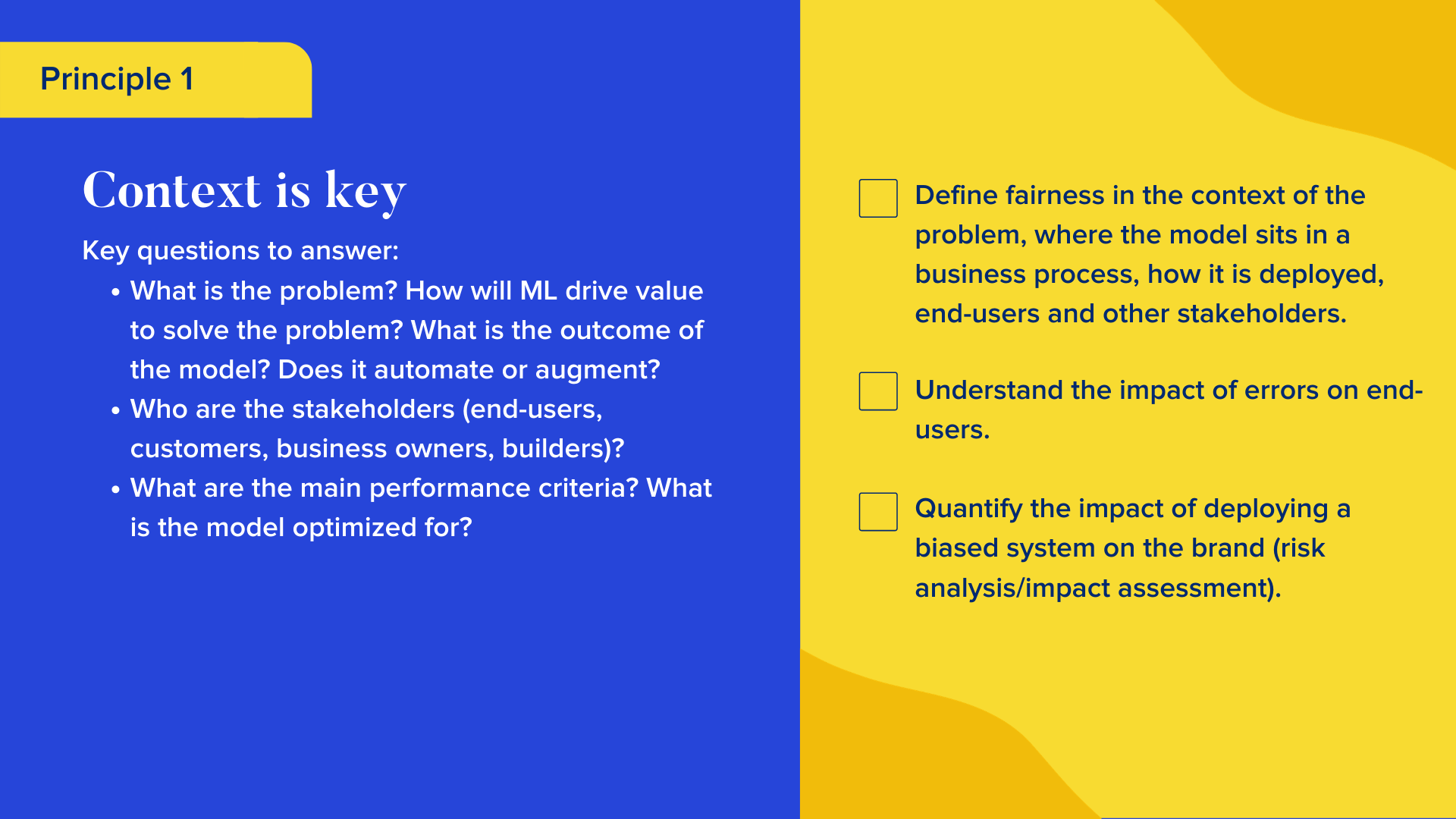

1. Context is Key

We can’t define fairness without understanding the problem and how ML will solve the problem. Fairness is defined within the context of the problem:

- Where the model sits in the business process.

- How it’s deployed.

- Who the end-users and other stakeholders are.

With Turnitin, we first sought to understand whether contract cheating detection should be fully automated or used to augment teachers and investigators. We also had to determine the main performance criteria and what the model optimizes for: maximizing true positives or minimizing false positives. Is precision more important than recall, or vice versa?

The model outputs likelihoods rather than a verdict of “cheating” or “not cheating,” so we wanted to include explanations and provide supporting evidence. We also had to determine how much error we could tolerate.
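
Because the model outputs a likelihood, someone has to choose the threshold at which a likelihood becomes a flag, and that choice is where the precision/recall trade-off is made. The minimal sketch below uses made-up scores (not Turnitin data) to show how raising the threshold trades recall for precision. When a false positive means accusing a student, a higher threshold may be preferable, but the right value depends on the context described above.

```python
from typing import Sequence, Tuple


def confusion_at_threshold(
    scores: Sequence[float], labels: Sequence[int], threshold: float
) -> Tuple[int, int, int, int]:
    """Turn probabilistic scores into hard flags and count (tp, fp, fn, tn)."""
    tp = fp = fn = tn = 0
    for score, label in zip(scores, labels):
        flagged = score >= threshold
        if flagged and label == 1:
            tp += 1
        elif flagged:
            fp += 1  # a false accusation
        elif label == 1:
            fn += 1  # a missed case
        else:
            tn += 1
    return tp, fp, fn, tn


def precision_recall(
    scores: Sequence[float], labels: Sequence[int], threshold: float
) -> Tuple[float, float]:
    tp, fp, fn, _ = confusion_at_threshold(scores, labels, threshold)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


# Illustrative scores and labels, not real data.
scores = [0.95, 0.80, 0.65, 0.40, 0.30, 0.10]
labels = [1, 1, 0, 1, 0, 0]
for t in (0.2, 0.5, 0.9):
    p, r = precision_recall(scores, labels, t)
    print(f"threshold={t:.1f} precision={p:.2f} recall={r:.2f}")
```

On this toy data, moving the threshold from 0.2 to 0.9 raises precision from 0.60 to 1.00 while recall drops from 1.00 to 0.33.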

When you put a proposal for an AI product in front of your CEO, think about quantifying and discussing the brand impact if the model discriminated against minority groups. You can follow an assessment similar to the one you would use for security and privacy risks. Consider what the effect of errors and bias would be on end-users and customers. In Turnitin’s case, this risk was high. Someone accused of cheating on their assignment may fail or be expelled. Mistakenly accusing a student of cheating may also tarnish the school’s reputation.

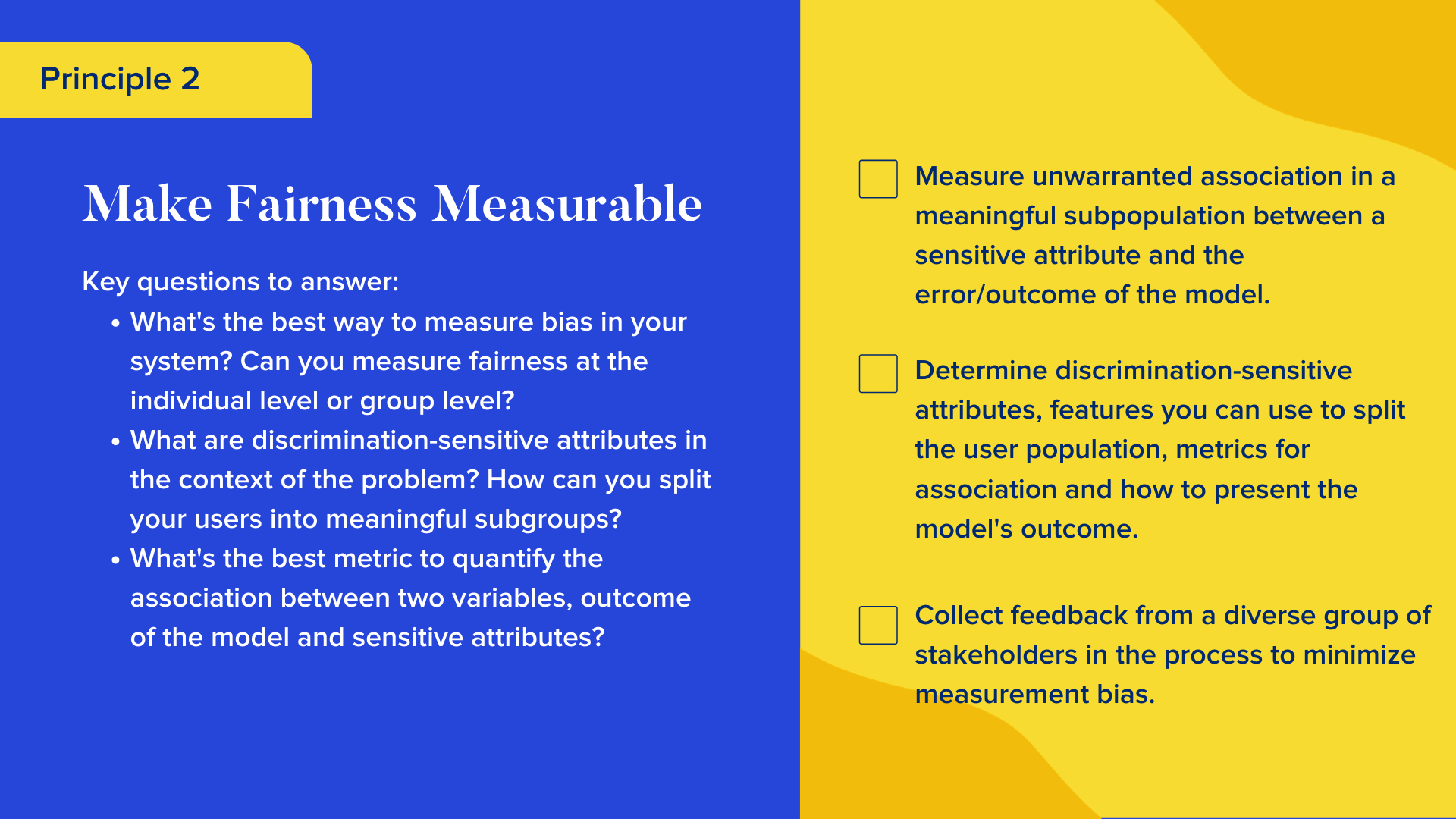

2. Make Fairness Measurable

Just as it’s essential to measure the performance of ML solutions before deploying a model, you’ll want to measure fairness too. However, defining what is considered bias and making it measurable is challenging.

Several metrics for measuring fairness in ML have been proposed in recent years (mainly for classification), such as individual fairness, group fairness, demographic parity and equality of opportunity. To measure any unwarranted association between a sensitive attribute and the model’s error in a meaningful subpopulation, you first need to identify discrimination-sensitive attributes and decide how to split users into subgroups. To do that, collect feedback from diverse stakeholders and representatives of your user base to minimize measurement bias.
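
Two of these group metrics are straightforward to compute once each evaluation example has a subgroup label. The sketch below assumes binary predictions and uses the common definitions: demographic parity compares the rate of positive predictions across groups, and equality of opportunity compares true positive rates. Reporting the gap between the highest and lowest group is one simple summary among several; the data and group names are made up.

```python
from collections import defaultdict
from typing import Dict, Optional, Sequence, Tuple


def rates_by_group(
    y_true: Sequence[int], y_pred: Sequence[int], groups: Sequence[str]
) -> Dict[str, Tuple[float, Optional[float]]]:
    """Per-group selection rate P(pred=1) and true positive rate P(pred=1 | true=1)."""
    n = defaultdict(int)
    predicted_pos = defaultdict(int)
    actual_pos = defaultdict(int)
    true_pos = defaultdict(int)
    for t, p, g in zip(y_true, y_pred, groups):
        n[g] += 1
        predicted_pos[g] += p
        actual_pos[g] += t
        true_pos[g] += int(t == 1 and p == 1)
    return {
        g: (
            predicted_pos[g] / n[g],
            # TPR is undefined for a group with no actual positives.
            true_pos[g] / actual_pos[g] if actual_pos[g] else None,
        )
        for g in n
    }


def demographic_parity_difference(y_true, y_pred, groups) -> float:
    selection = [sel for sel, _ in rates_by_group(y_true, y_pred, groups).values()]
    return max(selection) - min(selection)


def equal_opportunity_difference(y_true, y_pred, groups) -> float:
    tprs = [
        tpr
        for _, tpr in rates_by_group(y_true, y_pred, groups).values()
        if tpr is not None
    ]
    return max(tprs) - min(tprs)


# Toy data with two hypothetical groups, "a" and "b".
y_true = [1, 0, 1, 0, 1, 0, 1, 0]
y_pred = [1, 0, 1, 1, 1, 0, 0, 0]
groups = ["a"] * 4 + ["b"] * 4
print(demographic_parity_difference(y_true, y_pred, groups))
print(equal_opportunity_difference(y_true, y_pred, groups))
```

Here group "a" is flagged three times as often as group "b" (0.75 vs 0.25) and also has a higher true positive rate (1.0 vs 0.5), so both gaps come out at 0.5.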

In the Turnitin project, we thought ethnicity and gender might be the most critical discrimination-sensitive attributes. Then we looked at socioeconomic status. We also considered student performance: are we discriminating against students who aren’t performing well in the course? Then, subject matter: do literature majors have more false positives than history or engineering majors? I’m a non-native English speaker, and the biggest question for me was: does our model have any bias against non-native English speakers?
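
Questions like these translate naturally into slicing an evaluation set by each candidate attribute. Because a false positive here means wrongly flagging a student, the sketch below reports the false positive rate per slice. The record layout and field names (`label`, `pred`, `subject`, `native_speaker`) are hypothetical, chosen only for illustration.

```python
from collections import defaultdict
from typing import Dict, Hashable, Iterable, Mapping


def fpr_by_slice(records: Iterable[Mapping], attribute: str) -> Dict[Hashable, float]:
    """False positive rate per value of `attribute`, computed over true negatives only."""
    negatives = defaultdict(int)
    false_positives = defaultdict(int)
    for r in records:
        if r["label"] == 0:  # only students who did not cheat can be falsely flagged
            negatives[r[attribute]] += 1
            false_positives[r[attribute]] += r["pred"]
    return {value: false_positives[value] / negatives[value] for value in negatives}


# Hypothetical evaluation records; field names are illustrative only.
records = [
    {"label": 0, "pred": 1, "subject": "literature", "native_speaker": False},
    {"label": 0, "pred": 0, "subject": "literature", "native_speaker": True},
    {"label": 0, "pred": 0, "subject": "history", "native_speaker": True},
    {"label": 0, "pred": 1, "subject": "history", "native_speaker": False},
    {"label": 0, "pred": 0, "subject": "engineering", "native_speaker": True},
    {"label": 1, "pred": 1, "subject": "engineering", "native_speaker": False},
]
for attribute in ("subject", "native_speaker"):
    print(attribute, fpr_by_slice(records, attribute))
```

In practice each slice needs enough examples for its rate to be meaningful; a slice with a handful of negatives can swing wildly.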

Often, we don’t have access to the discrimination-sensitive attributes needed to measure fairness. Turnitin, for example, doesn’t hold demographic information. That was good for the model, since the information couldn’t be used in training, but it made bias and fairness harder to measure. We used names to approximate likely ethnicity and gender so that we could test the model. We also used open-source tools to predict each writer’s native language from the text. We used these predictions only to test for fairness and didn’t use them to train our models.

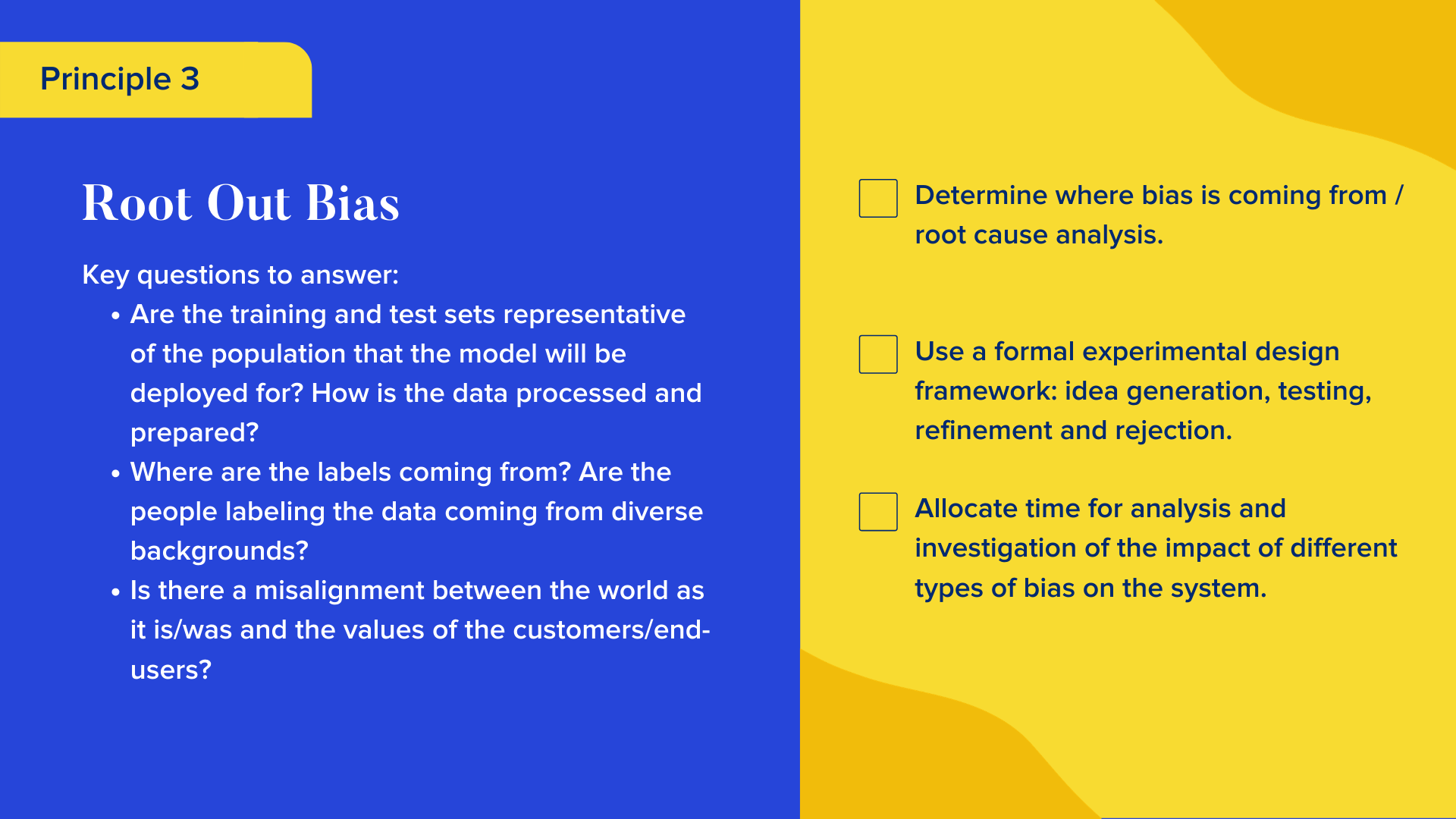

3. Root Out Bias

Once we’ve determined the possible sources of bias in our ML system, we need to figure out how to root them out. A Framework for Understanding Unintended Consequences of Machine Learning introduces six sources of downstream harm in ML models: historical bias, representation bias, measurement bias, aggregation bias, evaluation bias and deployment bias. The key takeaway is that bias can be introduced at many different stages of an ML product’s development and life cycle.

A good example is cancer detection using computer vision. Various studies show these systems don’t perform as well for Black patients. When researchers looked more closely at the source of the bias, it turned out that cancer can present differently in Black patients. Because the training data included only people with paler skin, the models never saw those patterns, and the researchers’ tests missed the gap too, so they never realized there was a problem in their system.
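
A simple guard against this kind of gap is to compare subgroup shares in the training and test data against a reference population before training. The sketch assumes each example already carries a group label (here a coarse, hypothetical skin-tone category) and that you have positive reference shares from an appropriate source; the 0.8 cut-off and all numbers are arbitrary illustrations.

```python
from collections import Counter
from typing import Dict, Sequence, Tuple


def representation_report(
    train_groups: Sequence[str],
    reference_shares: Dict[str, float],
    min_ratio: float = 0.8,
) -> Dict[str, Tuple[float, float, bool]]:
    """Compare each group's share of the data with a (positive) reference share.

    Returns, per group: (share_in_data, share / reference_share, under_represented).
    """
    counts = Counter(train_groups)
    total = sum(counts.values())
    report = {}
    for group, ref_share in reference_shares.items():
        share = counts.get(group, 0) / total
        ratio = share / ref_share
        report[group] = (share, ratio, ratio < min_ratio)
    return report


# Illustrative numbers only: a dataset dominated by lighter skin tones.
train_groups = ["lighter"] * 90 + ["darker"] * 10
reference = {"lighter": 0.6, "medium": 0.25, "darker": 0.15}
for group, (share, ratio, flagged) in representation_report(train_groups, reference).items():
    print(f"{group:8s} share={share:.2f} ratio={ratio:.2f} under-represented={flagged}")
```

Adequate coverage does not by itself guarantee equal performance, so this check complements, rather than replaces, evaluating error rates per group.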

There is also the question of where labels come from. Often they come from historical human behavioral data, and sometimes we ask people to label data for us. We know humans make errors and have various cognitive biases. In content moderation projects, for example, different annotators may have very different perceptions of what counts as toxic language.
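
Before treating human labels as ground truth, it can help to quantify how much annotators agree. Cohen’s kappa is one standard measure for two annotators: it compares observed agreement with the agreement expected by chance given each annotator’s label frequencies. A minimal standard-library sketch, with made-up toxicity labels:

```python
from collections import Counter
from typing import Hashable, Sequence


def cohens_kappa(labels_a: Sequence[Hashable], labels_b: Sequence[Hashable]) -> float:
    """Chance-corrected agreement between two annotators labeling the same items."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("need two non-empty label lists of equal length")
    n = len(labels_a)
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    # Probability that both annotators pick the same label by chance.
    expected = sum(freq_a[k] * freq_b[k] for k in freq_a) / (n * n)
    if expected == 1.0:
        # Both annotators used one identical label for everything; kappa is undefined.
        return float("nan")
    return (observed - expected) / (1 - expected)


annotator_1 = ["toxic", "toxic", "ok", "ok", "toxic", "ok"]
annotator_2 = ["toxic", "ok", "ok", "ok", "toxic", "toxic"]
print(round(cohens_kappa(annotator_1, annotator_2), 3))
```

The two annotators agree on four of six items, but half of that agreement is expected by chance, so kappa is only about 0.33. A low value suggests the labeling guidelines may need work; for more than two annotators, measures such as Fleiss’ kappa or Krippendorff’s alpha are commonly used.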

Finally, the world as it was (which we see in our datasets) is often different from the values of our customers and end-users. Let’s look at a fictional example of employee churn. Say we want to build a system for large organizations to predict which employees will leave in the next six months. The system may help managers offer incentives to stay longer, but it may also lead to those employees being overlooked for promotion if they are seen as at risk of leaving. Say the model we build predicts that 90 percent of the employees leaving are women. Historically, women have quit their jobs more often than men to support their families. The model might pick up these attributes (or other attributes correlated with gender) as the primary indicator of employee attrition. In this example, the world as it was and the future we want, one where women are more involved in the economy, are misaligned.

We recommend a formal experimental design approach to rooting out these sources of bias: start with idea generation, then move through hypothesis testing, refinement and rejection. Make sure you allocate enough time to investigate different types of bias and their impact on your system.

In our contract cheating example, we identified that bias against non-native English speakers came from our sampling technique and how we generated the initial training set.
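
In the hypothesis-testing step, a question such as “does the model flag non-native speakers who did not cheat more often than native speakers?” can be checked with a standard two-proportion z-test on false positive counts. The sketch below is a standard-library version using the normal approximation. The counts in the usage line are invented for illustration, not results from the Turnitin project.

```python
import math
from typing import Tuple


def two_proportion_z_test(x_a: int, n_a: int, x_b: int, n_b: int) -> Tuple[float, float]:
    """Two-sided z-test of H0: both groups share the same underlying rate.

    x_* are event counts (here: false positives) and n_* are trial counts
    (here: students who did not cheat). Uses the normal approximation, which
    is only reasonable when the counts are not too small.
    """
    p_a, p_b = x_a / n_a, x_b / n_b
    pooled = (x_a + x_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 0.0, 1.0  # both rates are 0 or both are 1: no evidence of a difference
    z = (p_a - p_b) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return z, p_value


# Hypothetical counts: false positives among non-native vs native speakers.
z, p = two_proportion_z_test(30, 200, 12, 200)
print(f"z={z:.2f}, p={p:.4f}")
```

When you test many subgroups, some gaps will look significant by chance alone, so consider a multiple-comparison correction such as Bonferroni before rejecting a hypothesis.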

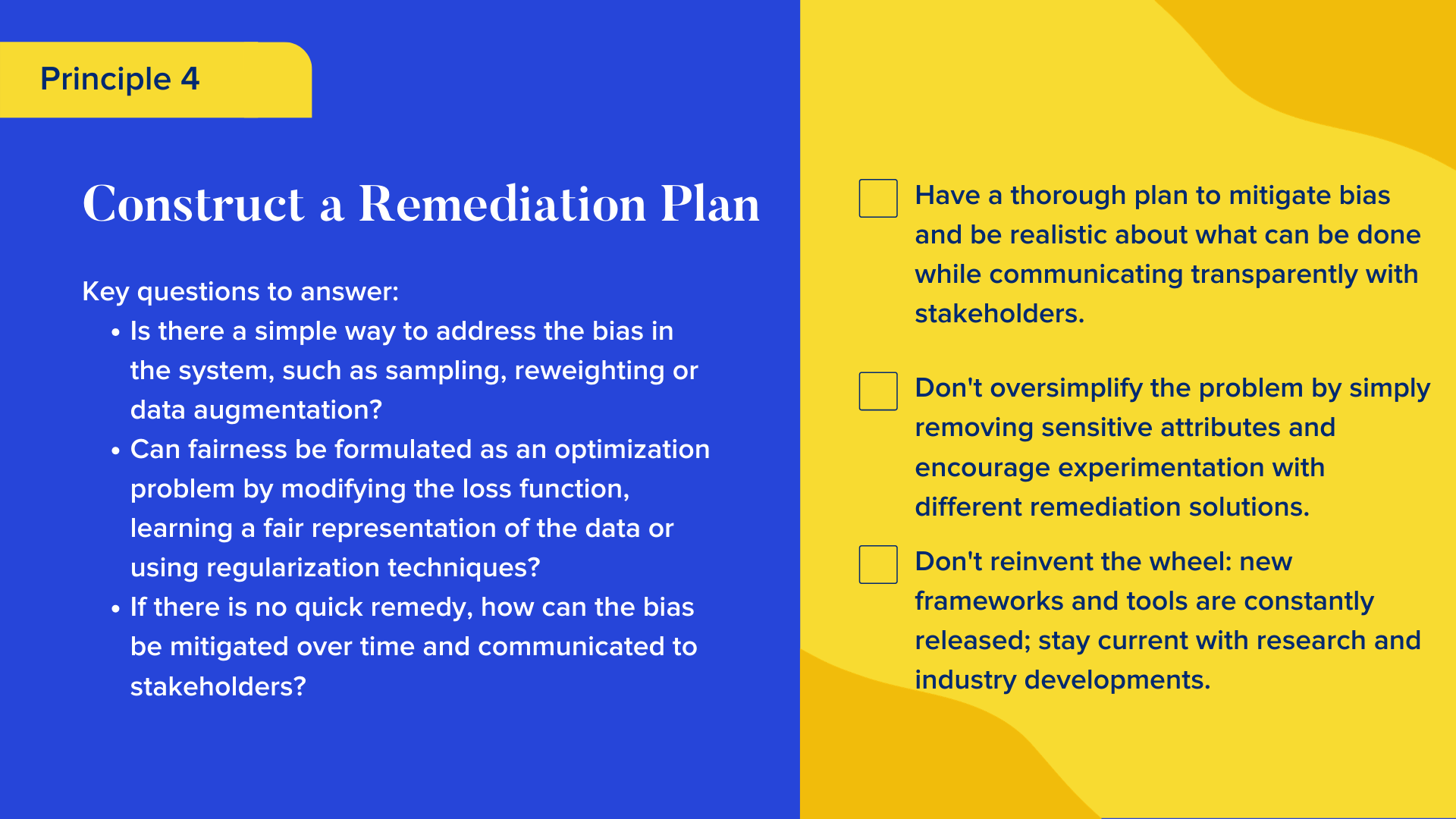

4. Construct a Remediation Plan

Based on our initial root cause analysis, we can start with simpler ways to address bias in the system, such as using existing research tools or adjusting sampling, reweighting or augmenting the data. The other avenue available to us is to formulate fairness as an optimization problem by modifying the loss function, learning a fair representation of the data or using regularization techniques.
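
Reweighting can be as simple as the reweighing scheme of Kamiran and Calders, which gives each (group, label) combination the weight P(group) * P(label) / P(group, label). After weighting, the label is statistically independent of the group in the training data (provided every combination occurs). A minimal sketch, assuming a single categorical group attribute; many learners, such as scikit-learn estimators whose `fit` method accepts `sample_weight`, can consume the resulting weights.

```python
from collections import Counter
from typing import Hashable, List, Sequence


def reweighing_weights(groups: Sequence[Hashable], labels: Sequence[Hashable]) -> List[float]:
    """Per-example weights P(g) * P(y) / P(g, y), computed from empirical counts."""
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    joint_counts = Counter(zip(groups, labels))
    return [
        # (count(g)/n) * (count(y)/n) / (count(g, y)/n), simplified
        group_counts[g] * label_counts[y] / (n * joint_counts[(g, y)])
        for g, y in zip(groups, labels)
    ]


groups = ["a", "a", "a", "b", "b", "b"]
labels = [1, 1, 0, 1, 0, 0]
weights = reweighing_weights(groups, labels)
print(weights)  # [0.75, 0.75, 1.5, 1.5, 0.75, 0.75]
```

In this toy data, group "a" has a positive rate of 2/3 and group "b" 1/3; after weighting, both have a weighted positive rate of 0.5.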

For our contract cheating problem, we were dealing with highly imbalanced data. We didn’t have many historical cases of contract cheating, so we had to find a way to generate more positive samples. We augmented our training data by pairing similar essays written by two different students and labeling each pair as a contract cheating case. Given the bias against non-native speakers, we realized we could fix the bias in our train and test sampling by picking essays from native speakers when synthesizing positive contract cheating cases.
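
The augmentation idea can be sketched as follows. This is a hypothetical reconstruction for illustration, not Turnitin’s pipeline: the data layout (a dict from student ID to that student’s essays), the function name and the `eligible` predicate are all assumptions, and the similarity filter the text mentions (pairing *similar* essays) is omitted. The point of the predicate is to make the sampling mix explicit, so the group composition of the synthesized positives is a deliberate, auditable choice rather than an accident of sampling.

```python
import random
from typing import Callable, Dict, List


def synthesize_positive_pairs(
    essays_by_student: Dict[str, List[str]],
    eligible: Callable[[str], bool],
    n_pairs: int,
    seed: int = 0,
) -> List[dict]:
    """Create synthetic contract-cheating examples (hypothetical helper).

    Each example pairs one student's earlier essays with an essay written by a
    different student and labels it 1. Only students for whom `eligible`
    returns True contribute essays, which fixes the sampling mix up front.
    """
    rng = random.Random(seed)  # seeded so the synthetic set is reproducible
    pool = [s for s, essays in essays_by_student.items() if essays and eligible(s)]
    if len(pool) < 2:
        raise ValueError("need at least two eligible students with essays")
    examples = []
    for _ in range(n_pairs):
        owner, writer = rng.sample(pool, 2)  # two distinct students
        examples.append(
            {
                "history": list(essays_by_student[owner]),
                "submitted": rng.choice(essays_by_student[writer]),
                "owner": owner,
                "writer": writer,
                "label": 1,
            }
        )
    return examples


# Toy data; the native-speaker flags stand in for whatever grouping you audit.
essays = {"s1": ["e1a", "e1b"], "s2": ["e2a"], "s3": ["e3a", "e3b"], "s4": ["e4a"]}
native = {"s1": True, "s2": True, "s3": False, "s4": True}
pairs = synthesize_positive_pairs(essays, eligible=lambda s: native[s], n_pairs=5)
```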

We were able to find a fairly simple solution. Sometimes there is no solution, or finding one takes time. No system is perfect: we know ML models make errors, so it’s a matter of focusing on bias and improving over time. When Google Photos’ image recognition labeled photos of Black people as gorillas, the development team removed “gorilla” from the set of possible labels as a quick fix. That addressed the immediate problem but not its source; it merely treated the symptoms. You should have both a short-term mitigation plan and a long-term plan to fix the root cause.

If there is no quick remedy, it’s essential to have a plan to mitigate bias and to be realistic about what you can do while communicating transparently with stakeholders. The cancer detection system we discussed earlier, for example, does bring value. Still, we must tell doctors that these systems make more errors for people of color, and we must prioritize collecting data from people of color to close the data gap.

Don’t oversimplify the problem by saying, “I’m not using demographic data, so I’m fine.” That’s the biggest mistake any data scientist can make. Sensitive attributes are often correlated with other attributes, and there are many different sources of bias in an ML solution.
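
One way to check for such correlations is to measure how strongly each candidate feature is associated with a sensitive attribute, even when that attribute is not a model input. The sketch below uses Cramér’s V, a standard association measure between two categorical variables (0 means no association, 1 means one determines the other). Continuous features would need binning first, and training a classifier to predict the sensitive attribute from all features together is a stronger check. The feature names refer to the hypothetical employee churn example above.

```python
import math
from collections import Counter
from typing import Dict, Hashable, List, Sequence, Tuple


def cramers_v(xs: Sequence[Hashable], ys: Sequence[Hashable]) -> float:
    """Cramér's V between two categorical variables, from the chi-square statistic."""
    n = len(xs)
    joint = Counter(zip(xs, ys))
    x_counts, y_counts = Counter(xs), Counter(ys)
    chi2 = 0.0
    for x, cx in x_counts.items():
        for y, cy in y_counts.items():
            expected = cx * cy / n
            chi2 += (joint[(x, y)] - expected) ** 2 / expected
    k = min(len(x_counts), len(y_counts)) - 1
    return math.sqrt(chi2 / (n * k)) if k > 0 else 0.0


def rank_proxies(
    features: Dict[str, Sequence[Hashable]], sensitive: Sequence[Hashable]
) -> List[Tuple[str, float]]:
    """Rank features by their association with the sensitive attribute."""
    scores = [(name, cramers_v(values, sensitive)) for name, values in features.items()]
    return sorted(scores, key=lambda item: item[1], reverse=True)


# Hypothetical HR data for the churn example; gender is NOT a model feature.
gender = ["f", "f", "f", "m", "m", "m"]
features = {
    "part_time": ["yes", "yes", "yes", "no", "no", "no"],
    "team": ["x", "y", "x", "y", "x", "y"],
}
for name, v in rank_proxies(features, gender):
    print(f"{name}: {v:.2f}")
```

In this toy data, `part_time` perfectly encodes gender (V = 1.0), so dropping the gender column alone would not stop a model from learning it.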

5. Plan for Monitoring and Continuous Quality Assurance

When you deploy your ML solution, you’ll want to monitor and measure biases in production. Your system’s performance may be different from what you expected.

There may be unanticipated edge cases or drift in the input distribution, or individuals and groups that were not well represented in the training data may start using the solution in production. Decide how you will monitor for these cases and alert the development team if your bias metrics exceed predefined thresholds, which is another reason to make fairness measurable and identify the right metrics early on. To make sure this step doesn’t fall through the cracks, document who is responsible for continuous monitoring and quality assurance and who is accountable if anything goes wrong.
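
Once the metric and threshold are fixed, a monitoring check can be quite small. The sketch below assumes that ground-truth outcomes eventually arrive for production cases (for instance, after an investigation concludes) and that each case carries a group label used only for monitoring. The class name, threshold and minimum group size are illustrative assumptions, and `alert` stands in for whatever notification channel the accountable owner uses.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Callable, Dict, Iterable, Tuple


@dataclass
class FprGapMonitor:
    """Alert when the false-positive-rate gap between groups exceeds a threshold."""

    threshold: float  # maximum acceptable FPR gap, agreed before launch
    alert: Callable[[str], None]  # e.g. a function that notifies the owning team
    min_negatives: int = 30  # skip groups too small to give a stable rate

    def check(self, window: Iterable[Tuple[str, int, int]]) -> bool:
        """`window` holds (group, true_label, prediction) for recently labeled cases."""
        negatives: Dict[str, int] = defaultdict(int)
        false_pos: Dict[str, int] = defaultdict(int)
        for group, label, pred in window:
            if label == 0:
                negatives[group] += 1
                false_pos[group] += pred
        fpr = {
            g: false_pos[g] / negatives[g]
            for g in negatives
            if negatives[g] >= self.min_negatives
        }
        if len(fpr) < 2:
            return False  # not enough data to compare groups
        gap = max(fpr.values()) - min(fpr.values())
        if gap > self.threshold:
            self.alert(f"FPR gap {gap:.3f} exceeds {self.threshold:.3f}: {fpr}")
            return True
        return False


alerts = []
monitor = FprGapMonitor(threshold=0.05, alert=alerts.append, min_negatives=3)
window = [
    ("native", 0, 0), ("native", 0, 0), ("native", 0, 1),
    ("non_native", 0, 1), ("non_native", 0, 1), ("non_native", 0, 0),
]
fired = monitor.check(window)
```

The minimum group size is a deliberate trade-off: it suppresses noisy alerts from tiny groups, at the cost of staying silent about exactly the under-represented groups that may need attention, so those deserve a separate review.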

6. Debug Bias Using Explainability

Bias bugs, just like security and privacy bugs, are hard to identify. We need tools that let us debug bias, even if they’re still not perfect. ML explainability techniques are among the best tools developers and auditors have for identifying unwanted bias in ML products.

As AI techniques become increasingly complex, data scientists have to figure out how to explain the choices of their models instead of simply assuming that users are happy to trust a black box. We need to help users of machine learning understand how our models make predictions. By helping to raise awareness, we’re also holding ourselves accountable for the systems we develop.

The field of explainability is changing rapidly, and new tools such as SageMaker Clarify, FairTest and SHAP (SHapley Additive exPlanations) keep appearing. Development teams can use existing techniques, tools and frameworks to debug their systems.
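
SHAP is built on Shapley values from game theory: a feature’s attribution is its average marginal contribution to the prediction across all orderings of the features. The library itself uses faster model-specific and approximate algorithms. The sketch below is not its API but an exact, brute-force computation that is only practical for a handful of features. It assumes “absent” features are replaced by a baseline value, which is one of several conventions, and the toy scoring function and feature names are hypothetical.

```python
from itertools import combinations
from math import factorial
from typing import Callable, List, Sequence


def exact_shapley(
    model: Callable[[Sequence[float]], float],
    x: Sequence[float],
    baseline: Sequence[float],
) -> List[float]:
    """Exact Shapley values, replacing features outside a coalition with the baseline."""
    n = len(x)

    def value(coalition: set) -> float:
        z = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return model(z)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            # Shapley weight for coalitions of this size that exclude feature i.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi


# Hypothetical score over three made-up features:
# [style_distance, vocabulary_shift, formatting_change]
def toy_score(z: Sequence[float]) -> float:
    return 0.5 * z[0] + 0.3 * z[0] * z[1] + 0.1 * z[2]


x = [1.0, 2.0, 0.0]
baseline = [0.0, 0.0, 0.0]
print([round(v, 3) for v in exact_shapley(toy_score, x, baseline)])
```

The attributions sum to the difference between the prediction and the baseline prediction, and the interaction term is split evenly between the two features involved. That additivity is what makes such attributions useful for auditing: comparing average attributions across subgroups can reveal whether a feature drives predictions for one group more than another.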

In our project, we realized teachers and investigators expect the system to provide more than a probability of cheating; they need supporting evidence. We created a user interface that highlights why the model made its prediction and any potential biases, and we provided a list of questions to help investigators conduct their investigations.

7. Reduce Bias by Building Diverse Teams

Bias in AI often traces back to the people developing the systems. There are many examples of what happens when women and under-represented groups are absent from AI development, such as the first release of Siri that couldn’t recognize women’s voices. When solving complex problems, there are so many assumptions and hypotheses that it’s hard to have a comprehensive view of the challenge without a diverse product and R&D team.

Successful teams bring diverse voices into the design and development process, with diversity of gender, ethnicity, background and skills. As a data scientist, you can champion diversity and transparency in your culture and ensure there are mechanisms for individuals, whether employees or end-users, to safely voice concerns over potential bias in your algorithm’s output.

Conclusion

The steps laid out in these seven principles of building fair ML systems can be challenging and take time. You have to communicate the plan and its importance to business stakeholders. Even if you can’t address all your bias problems right away, create a plan to mitigate some of these biases in the next version of your products and solutions.

Keep these principles in mind when building your own AI products. Users will be more likely to trust your product, you can become a leader in your market, and you will have a more positive impact on the world.

Georgian’s frameworks and tools help our portfolio companies create fair AI products. To learn more about differentiating your AI-based product on trust, read more here.
